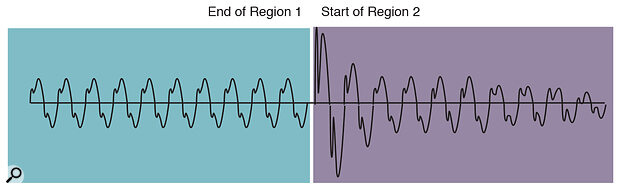

Figure 1: If an edit is made immediately before a drum beat, any glitch caused by less‑than‑perfect timing is likely to be hidden.

After last month's overview of the equipment and processes involved in compiling an album master from mixes, Paul White gets down to the business of sorting out wanted audio from unwanted... This is the second article in a three‑part series.

The first step in any album editing project is to load all the audio material you'll need for the session onto your computer's hard drive. As explained last month, unless you are using an analogue source, or intend to go via an analogue processor, transferring in the digital domain is to be preferred so as to avoid the quality loss that occurs when your signal makes an unnecessary trip via the A‑D converters of your sound card or audio interface. Working at bit depths in excess of 16‑bit and then dithering down to 16 bits as the final stage of processing is the best option from a quality point of view, but in reality, most work arrives in a 16‑bit format already.
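As a rough illustration of what "dithering down to 16 bits" involves, the sketch below quantises higher-resolution samples to 16-bit integers, optionally adding a small amount of triangular (TPDF) noise first. It assumes samples are floats in the range -1.0 to 1.0, and the function name, the noise shape and the `seed` parameter are choices made for this example only; it is not how any particular editing package does the job.

```python
import random

FULL_SCALE_16 = 32768  # assumed scaling: float 1.0 maps to 32768 steps

def to_16_bit(samples, dither=True, seed=None):
    """Quantise float samples (-1.0..1.0) to 16-bit integers.

    With dither=True, triangular noise spanning +/-1 LSB is added before
    rounding. This is a hypothetical helper for illustration only.
    """
    rng = random.Random(seed)
    out = []
    for s in samples:
        scaled = s * FULL_SCALE_16
        if dither:
            # Sum of two uniform values in [-0.5, 0.5) gives triangular noise
            scaled += (rng.random() - 0.5) + (rng.random() - 0.5)
        q = int(round(scaled))
        # Clamp to the legal 16-bit range
        out.append(max(-FULL_SCALE_16, min(FULL_SCALE_16 - 1, q)))
    return out

high_res = [0.0, 0.25, -0.5, 0.123456789]
plain = to_16_bit(high_res, dither=False)
dithered = to_16_bit(high_res, seed=1)
```

The dithered values never stray more than a couple of steps from the plainly rounded ones; the point of the added noise is to decorrelate the rounding error from the signal, which is why it belongs at the very last stage.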

DAT is still the most common source medium, but DAT recordings may either be at 44.1kHz or 48kHz depending on the model of recorder. Consumer machines tend to work at 48kHz only, while the more professional models are switchable between 48kHz and 44.1kHz. It is also not entirely unheard of for clients to turn up with a DAT tape on which the sample rates vary from track to track! My own solution is to use a hardware sample‑rate converter between the DAT machine and the editing system (commercial hardware units can cost as little as £150, though the quality of conversion is generally better the more you spend). Alternatively, you can record the audio in at its original sample rate, then use the sample‑rate conversion provided by your editing software to ensure that everything ends up as a 44.1kHz file, though in Sound Designer II, which I use, this takes so long the album will probably be out of date before it's released! If you don't get the sample rates right, you'll find that 48kHz material appears to record properly at 44.1kHz, but it will be roughly 8 percent slower (44.1/48 is about 0.92), and around a semitone and a half lower in pitch, than it should be when you come to play it back.
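The size of the speed and pitch error follows directly from the ratio of the two sample rates. The short calculation below is only a sketch (the function name is made up for this example), but it shows how to work the figures out for any pair of rates.

```python
import math

def playback_error(recorded_rate_hz, playback_rate_hz):
    """Return (speed_ratio, percent_slower, semitone_shift) for audio
    sampled at recorded_rate_hz but played back at playback_rate_hz."""
    speed_ratio = playback_rate_hz / recorded_rate_hz
    percent_slower = (1.0 - speed_ratio) * 100.0
    # 12 semitones per octave, one octave per doubling of speed
    semitone_shift = 12.0 * math.log2(speed_ratio)
    return speed_ratio, percent_slower, semitone_shift

ratio, slower, semitones = playback_error(48000, 44100)
print(f"speed ratio {ratio:.4f}, {slower:.1f}% slower, {semitones:.2f} semitones")
```

A negative semitone figure means the material plays back flat; swapping the two arguments gives the opposite case of 44.1kHz material played at 48kHz.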

Some software packages don't mind whether you record your songs in as separate audio files or as one long single file, though others insist on everything being part of the same file. I usually try to record everything I need into one file, and if the material is spread over several tapes, I simply pause the recording process while I change tapes. It's wise to ensure you have a few seconds more material at the start and end of each piece of audio than you'll eventually need (to allow you room to manoeuvre when editing). The most important thing to check before recording is that your software is set to external digital sync if you're coming from a digital source. It always surprises me that so much editing software actually allows you to carry on with the job having selected a digital source and internal sync — surely a simple warning message isn't too much to ask for? If you forget to switch to external sync, you'll end up with a file full of ticks, clicks and glitches, which means starting the whole job again.

Regions

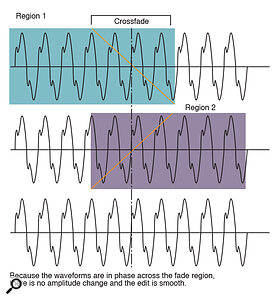

Figure 2: Ensuring that your regions start and end at zero crossing points does not guarantee a glitch‑free transition.

Terminology varies from one piece of software to another, but the first stage in editing invariably involves dividing the audio file up into regions. This is akin to chopping up an analogue tape into sections, then discarding what you don't need before splicing the pieces together. The main difference is that computer editing is largely non‑destructive, so although the user interface tells you you've created lots of little regions, the source audio file hasn't actually been changed. It's also possible to use the same region more than once, which you can't do with analogue tape unless you physically copy it.

If you're working with a client, try to explain the way your system works to avoid frustration. For example, I often find people saying they want to remove such and such a section, but my software doesn't think like that; it's only concerned with the sections you need to keep. So, when the client wants to remove section B, it helps if he or she knows that what they should really be doing is defining regions A and C on either side of it.
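The "keep A and C rather than delete B" idea can be sketched in a few lines. Here a region is assumed to be nothing more than a pair of sample positions pointing into the untouched source file, and the playlist is simply a list of such pairs; the names and the data layout are illustrative and not taken from any real editor.

```python
from typing import List, Tuple

Region = Tuple[int, int]  # (start_sample, end_sample), end not included

def keep_regions_around(file_length: int, cut: Region) -> List[Region]:
    """'Removing' the cut section really means keeping what lies either side."""
    cut_start, cut_end = cut
    regions = []
    if cut_start > 0:
        regions.append((0, cut_start))           # region A
    if cut_end < file_length:
        regions.append((cut_end, file_length))   # region C
    return regions

def render(source: List[float], playlist: List[Region]) -> List[float]:
    """Play the regions back in playlist order without altering the source."""
    out = []
    for start, end in playlist:
        out.extend(source[start:end])
    return out

source = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
playlist = keep_regions_around(len(source), cut=(2, 4))  # drop section B
edited = render(source, playlist)
```

Because the playlist only holds positions, the same region can appear in it more than once, and the source list is exactly as it was before the "edit".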

When it comes to defining the regions you want, the overview waveform of the entire file serves as a useful navigation aid, because at the very least you'll have a good idea where one song stops and the next one starts. Once you're in the right ballpark, you can play the file until you hear the song start, then immediately press stop. Zooming in on the waveform display should show the cursor positioned a short way after the start of the song (you may even need to scroll the display back a little way to find the actual start if your reactions weren't quick enough!). The visual waveform display is generally a very accurate way of locating your song start edit point, unless the song starts with a fade‑in. Even then, you can zoom in on the display height so you can see where the silence stops and where the signal begins. It's usually quite obvious where the first sound of a song is, but to double check, place the cursor immediately before the place where you think the song starts, then play the file from the cursor position. If you get a clean, immediate start, then you've picked the right place.
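What the eye does when zooming in on the waveform can be approximated in code by looking for the first sample that rises above the noise floor. The sketch below assumes float samples, and both the threshold and the few milliseconds of pre-roll are arbitrary values picked for the example; your ears remain the final check.

```python
def first_audible_sample(samples, threshold=0.001):
    """Index of the first sample whose level exceeds the threshold, or None."""
    for index, value in enumerate(samples):
        if abs(value) > threshold:
            return index
    return None

def region_start(samples, sample_rate_hz, pre_roll_ms=5.0, threshold=0.001):
    """Place the region start slightly before the first audible sample."""
    onset = first_audible_sample(samples, threshold)
    if onset is None:
        return None
    pre_roll = int(sample_rate_hz * pre_roll_ms / 1000.0)
    return max(0, onset - pre_roll)

# 1000 samples of silence, one sample of low-level noise, then the song
audio = [0.0] * 1000 + [0.0005, 0.02, 0.3, -0.4]
onset = first_audible_sample(audio)
start = region_start(audio, 44100)
```

A song that begins with a fade-in would need a much lower threshold, which is the code equivalent of zooming in on the display height.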

Sometimes, the first sound of a song isn't actually where you want the song to start: for example, there may be a guitar string squeak, a snare‑drum rattle or even a count‑in that you'd prefer to get rid of. It's in situations like these that having an editor with an audio scrub function is useful, as you can move back and forth across any section of audio at any speed, enabling you to correlate what you see on screen with what you're hearing. It isn't generally necessary to erase the unwanted material, just make sure the region start point comes after it, but out of habit, I tend to erase a few seconds back from the song start point just to keep things tidy. Be aware, however, that many editing packages actually change the file when you elect to silence something, so once you've gone beyond your one level of undo (or however many your package gives you), there's no way to restore the silenced audio if you made a mistake.

Before leaving the subject of song starts, it's also worth pointing out that a vocal intro may be preceded by a breath, and that taking out the breath may not always be the right thing to do. There are just as many artistic decisions as technical ones in editing, so let your ears decide what works best. Sometimes it's good to leave the breath intact but perhaps drop it in level by a few decibels.

Defining song ends isn't quite as easy because most songs have a little reverb at the end of the last note, which may itself sustain and decay over quite a long time. Using the vertical (amplitude) zoom facility usually makes it clear where the meaningful audio stops, though turning the gain up and using the scrub tool is generally just as effective. The majority of recordings contain a little background noise, so to keep things tidy, I tend to do a short fadeout starting just before the audio fades into nothingness and extending for a second or so. This ensures the song fades to complete silence.

If you want to add a gradual fade‑out to a song, you'll need to make the fade time around 25 to 30 seconds if you don't want it to sound rushed. Any material remaining after the fade is best silenced, but before you do that, check that the fade sounds OK while you still have the chance to undo it. Some packages offer a variety of fade curves, though the linear curve offered by SDII always seems reasonably natural. My own preference is to do fades after normalising or equalising, as my instinct tells me that this will be kinder to low‑level detail at the end of the fade, but on typical pop material, I have to admit there's no subjective difference.
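A linear fade is simply a gain that falls in a straight line from full level to zero over the chosen time. The following is a minimal sketch, assuming the audio is held as a list of float samples; the function name is invented for this example and has nothing to do with how SDII implements its fades.

```python
def linear_fade_out(samples, sample_rate_hz, fade_seconds):
    """Return a copy of samples with a linear fade over the final fade_seconds."""
    fade_len = min(len(samples), int(round(fade_seconds * sample_rate_hz)))
    out = list(samples)
    start = len(out) - fade_len
    for i in range(fade_len):
        # Gain falls in equal steps and reaches exactly 0.0 on the last sample
        gain = 1.0 - (i + 1) / fade_len
        out[start + i] *= gain
    return out

# Toy example: a 2-second 'song' at 10 samples per second, faded over 1 second
tone = [1.0] * 20
faded = linear_fade_out(tone, sample_rate_hz=10, fade_seconds=1.0)
```

At 44.1kHz a 30-second fade spans 1,323,000 samples, so each step of the gain ramp is tiny. Because the function returns a copy, the original remains available if the fade turns out to sound wrong.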

Tweaking

Providing there's no editing to do within the songs themselves, the next task after identifying the regions that define the individual songs on the album is to ensure that each track has a consistent sound and level. This doesn't mean everything should be at the same level — you may have some slow, moody songs mixed in with rock or dance tracks — but they should still have a natural balance. Listen to the rhythm track and the vocal levels to get a feel for the relative balance of the songs, and if you're still unsure whether or not something is too loud, listen from the next room with the door open, just as you might while mixing, as this seems to focus the mind on balance rather than other issues.

If the songs aren't all recorded at a high enough level, you may need to normalise low‑level songs before continuing. Normalisation simply increases the gain so that the loudest peak in the song is at 0dBFS (0dB relative to digital full scale). After normalisation, you can turn down the playback gain for the track until it sits comfortably with the rest of the album. If the levels are right but there seem to be tonal differences between tracks, you may need to apply some EQ or compression (see the 'Mastering Matters' box).
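Peak normalisation amounts to finding the loudest sample and scaling everything by whatever gain brings that sample up to full scale. The sketch below assumes float samples where 1.0 represents digital full scale; the function names and the `target_dbfs` parameter are illustrative.

```python
import math

def peak_dbfs(samples):
    """Level of the loudest sample in dB relative to full scale (1.0)."""
    peak = max(abs(s) for s in samples)
    return 20.0 * math.log10(peak) if peak > 0 else float("-inf")

def normalise(samples, target_dbfs=0.0):
    """Scale samples so the loudest peak sits at target_dbfs."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return list(samples)  # pure silence: nothing to scale
    gain = 10.0 ** (target_dbfs / 20.0) / peak
    return [s * gain for s in samples]

quiet = [0.0, 0.25, -0.5, 0.1]   # peaks at half of full scale
loud = normalise(quiet)
```

Note that the gain is set by a single peak, so one stray transient can leave a song sounding quieter than its neighbours even after normalising, which is why the playback gain still needs setting by ear afterwards.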

The Nitty Gritty

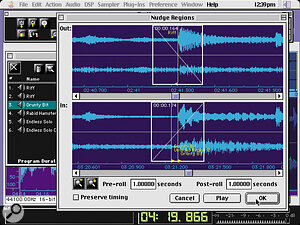

Figure 3: Crossfading between two practically identical signals can produce unexpected side‑effects.

On a straightforward editing job, this may be all you need to do apart from compiling your playlist and creating a master CD. The fun starts, however, when you have to edit songs together from various sections.

Selecting the individual regions that make up a song can be done by marking start and end points on the fly, then making fine adjustments in the playlist until the timing is right. With pop music, it's generally best to try to get the edit points to coincide with the start of a drum beat as this provides a good visual landmark, and also helps hide any discontinuities that might arise when the two sections come from two different mixes. Figure 1 illustrates this simple edit. However, you'll eventually come across an edit where there's a piece of vocal running over the edit point, and the singer doesn't use exactly the same timing in both cases. In this case, the result of editing on the beat is that the timing of the backing track will be OK, but the vocal will have a noticeable edit in the middle of a word. Probably the best way to get around this is to set the initial edit points on the beat, then go into the playlist and nudge the edit (both the end of the first region and the start of the second one) backwards or forwards in time in small increments until you've moved the edit to a point in between words. Of course you may find that you now have no drum beat to hide the edit, but if you can manage to line up the edit with a hi‑hat beat or other percussive event, it may help. If all else fails, you can try a short crossfade, but as we'll see shortly this isn't foolproof either.

Trickier Edits

When editing classical music or other music with no obvious rhythmic edit points to act as landmarks in the waveform display, the best way to work is to mark up the regions on the fly (usually by hitting specific keys while the file is playing). Place the regions in order within the software's playlist, then loop around each edit point and nudge the end of one region or the start of the next until the timing sounds right. Only then should you worry about trying to disguise the edit.

As with the previous example involving two slightly different vocal performances, you may need to nudge the whole edit backwards or forwards in time until you find a point that produces an invisible mend; the final smoothing may have to be done using a short crossfade. The need to move edit points like this is one of the reasons for recording a few seconds more audio than you need at either end of each section.

If the edit doesn't coincide with a strong beat, you may find there's an audible glitch at the edit point. One myth to get out of the way is that making edits at zero crossing points will guarantee no glitching: it won't. You'll only avoid a glitch if the waveform at one side of the edit flows smoothly into the waveform at the other side. Figure 2 shows that even if the waveforms at either side of the edit are identical, there are two possible scenarios, one of which will cause a glitch and one of which won't. In the first example, the waveforms either side of the edit are in phase, so the transition will be smooth, while in the second, the waveforms are out of phase, resulting in a discontinuity at the edit point that will be heard as a click.
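One way to see why a zero crossing alone isn't enough is to splice test tones in code and measure how sharply the waveform changes direction at the join. The sketch below is a simplified model using pure sine waves and a made-up `kink_at_join` measure; real programme material is far messier, but the principle is the same.

```python
import math

SAMPLE_RATE = 44100
FREQ = 441.0  # chosen so one cycle is exactly 100 samples

def sine(n_samples, phase=0.0):
    return [math.sin(2 * math.pi * FREQ * n / SAMPLE_RATE + phase)
            for n in range(n_samples)]

def kink_at_join(a, b):
    """Change in slope across the splice between regions a and b."""
    joined = a + b
    j = len(a)  # index of the first sample of b
    slope_before = joined[j - 1] - joined[j - 2]
    slope_after = joined[j + 1] - joined[j]
    return abs(slope_after - slope_before)

region_a = sine(100)                # ends just before an upward zero crossing
in_phase = sine(100, 0.0)           # starts at zero, heading upwards
out_of_phase = sine(100, math.pi)   # also starts at zero, but heading downwards

smooth = kink_at_join(region_a, in_phase)
kinked = kink_at_join(region_a, out_of_phase)
```

Both candidate regions begin at a zero crossing, yet only the in-phase one continues in the direction the first region was travelling; the out-of-phase splice reverses direction abruptly at the join.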

The usual solution to an awkward edit is to use a crossfade between the two regions, but even crossfades aren't foolproof. A crossfade involves fading one region out following the edit point while at the same time fading in the second region prior to the edit point — which is another reason for recording a few seconds more than you need at either end of each section. But the problem with a crossfade is that it is just that — a fading between two sounds — so for the duration of the crossfade, both sounds are audible in changing proportions, with the balance being equal in the middle of the crossfade. Unless the sounds are absolutely identical and in phase, you may hear a double‑tracking or chorus‑like effect during the crossfade, which is one reason to keep crossfades as short as possible. Furthermore, if there is a large phase shift between the sounds either side of the crossfade, you may hear a noticeable dip in level in the middle of the crossfade as shown in Figure 3. This is yet another reason to check that your edit points occur at zero crossing points and that the waveforms either side of the edit are in phase.
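The level dip in the middle of a crossfade can be estimated with a simple model: at the centre of a linear crossfade both signals are at half gain, so two otherwise identical tones add or cancel according to the phase shift between them. The code below is a sketch under those assumptions (unit-level sine waves, a linear fade law), with hypothetical function names.

```python
import math

def midpoint_level(phase_shift_rad, n=1000):
    """Peak level of a 50/50 mix of two unit sine waves, as found at the
    centre of a linear crossfade, for a given phase shift between them."""
    peak = 0.0
    for k in range(n):
        x = 2 * math.pi * k / n
        mixed = 0.5 * math.sin(x) + 0.5 * math.sin(x + phase_shift_rad)
        peak = max(peak, abs(mixed))
    return peak

def to_db(level):
    return 20.0 * math.log10(level) if level > 1e-12 else float("-inf")

for degrees in (0, 90, 180):
    level = midpoint_level(math.radians(degrees))
    print(f"{degrees:3d} degrees: level {level:.3f} ({to_db(level):.1f} dB)")
```

With no phase shift the level is unchanged, a quarter-cycle shift costs about 3dB, and a half-cycle shift cancels the signal almost completely at the midpoint.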

Avoid long crossfades over percussive beats, as you can end up with a flamming effect if the timing of the two beats isn't spot on. As a rule, a 20ms crossfade is long enough to prevent clicks, though a longer one may be necessary to smooth out an awkward transition.
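To put the 20ms figure in context, the sketch below converts a crossfade time into a number of samples and then mixes two overlapping stretches of audio with a linear fade law. It assumes float samples and a 44.1kHz default rate; the function names are invented for this example, and real editors usually offer other fade curves as well.

```python
def crossfade_samples(duration_ms, sample_rate_hz=44100):
    """Number of samples covered by a crossfade of the given length."""
    return int(round(duration_ms * sample_rate_hz / 1000.0))

def linear_crossfade(outgoing_tail, incoming_head):
    """Mix two equal-length overlaps: the outgoing region fades down
    while the incoming region fades up."""
    n = len(outgoing_tail)
    if n != len(incoming_head):
        raise ValueError("overlaps must be the same length")
    mixed = []
    for i in range(n):
        fade_in = (i + 1) / (n + 1)   # rises steadily, never quite 0 or 1
        mixed.append(outgoing_tail[i] * (1.0 - fade_in) + incoming_head[i] * fade_in)
    return mixed

length = crossfade_samples(20)                          # samples in 20ms at 44.1kHz
demo = linear_crossfade([1.0, 1.0, 1.0], [0.0, 0.0, 0.0])
```

The overlap has to come from somewhere, which is why each region needs spare audio beyond its nominal start and end points.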

Where the material either side of the crossfade is well matched (for example, from two takes of the same song, mixed similarly), keep the fades as short as is possible while still achieving a smooth edit. Where the material is completely different either side of an edit, for example two different pieces of music, or a decaying last note followed by a burst of 'spontaneous' applause, you can use as long a crossfade as you need — as the waveforms aren't in any way correlated, there won't be any phase cancellation.

In Part 3 I'll be looking at ways to deal with clicks, playlist compilation and CD burning. Until then, happy editing!

Mastering Matters

Fine‑tuning a crossfade between regions in BIAS Peak v2.

If you're dealing with a collection of songs which were recorded or mixed at different times, you may find you have tonal differences to deal with as well as differences in level. The trick here is to pick what you think is the best‑sounding song on the album (tonally, not necessarily musically), then use EQ to try to get the other tracks to sound similar. As ever, use EQ boost sparingly — you can go at it a bit harder with cut, but listen carefully for any hint of the sound becoming unnatural or nasal. Every EQ situation is different, but if you have a parametric plug‑in, try a little 15kHz boost with a 3‑octave bandwidth to add sheen and detail. Presence can be added by boosting at 4 to 6kHz. Bass sounds can be boosted at 80 to 90Hz using a 1 to 2‑octave width setting while boxy drums and instruments can be tamed by cutting at around 150Hz using a 1‑octave width setting. Over‑thick vocals can sometimes be improved by cutting at around 200 to 250Hz. Approach EQ very carefully and use your bypass button often to make sure you haven't gone too far.
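If your plug-in specifies bandwidth as a Q value rather than in octaves, the settings above can be translated with a little arithmetic. The sketch below uses the common textbook relationship between octave bandwidth and Q and assumes the band is centred geometrically on the chosen frequency; plug-ins differ in exactly how they define bandwidth, so treat the results as a starting point only.

```python
import math

def band_edges_hz(centre_hz, bandwidth_octaves):
    """Lower and upper edges of a band centred (geometrically) on centre_hz."""
    half = 2.0 ** (bandwidth_octaves / 2.0)
    return centre_hz / half, centre_hz * half

def q_from_octaves(bandwidth_octaves):
    """Approximate Q equivalent to a bandwidth given in octaves."""
    ratio = 2.0 ** bandwidth_octaves
    return math.sqrt(ratio) / (ratio - 1.0)

# Centre frequencies and widths taken from the suggestions above
for centre, width in ((15000, 3.0), (150, 1.0)):
    low, high = band_edges_hz(centre, width)
    print(f"{centre}Hz, {width} octaves: {low:.0f}Hz to {high:.0f}Hz, "
          f"Q about {q_from_octaves(width):.2f}")
```

For the 3-octave setting at 15kHz, the calculated upper edge lies far above the audio band, so in practice only the lower slope of the boost does any work, reaching down to roughly 5kHz.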

Another useful mastering trick is to apply overall compression, but it's generally best to stick to very low ratios and low thresholds. I find that even a ratio of as little as 1.1:1 can make a huge difference in making a track sound bigger and more even. Auto attack and release times help, especially with material that is constantly changing in dynamics.
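The reason such a gentle ratio can still be audible is that a low threshold puts almost the whole signal above it. The sketch below models only the static input/output curve of an idealised compressor (no attack, release or make-up gain), and the -40dB threshold is an assumed figure chosen for the example, not a recommended setting.

```python
def compressed_level_db(input_db, threshold_db, ratio):
    """Output level of an idealised compressor for a steady input level."""
    if input_db <= threshold_db:
        return input_db  # below threshold: untouched
    return threshold_db + (input_db - threshold_db) / ratio

THRESHOLD_DB = -40.0   # assumed low threshold, for illustration only
RATIO = 1.1

for level in (-50.0, -20.0, 0.0):
    out = compressed_level_db(level, THRESHOLD_DB, RATIO)
    print(f"in {level:6.1f}dB -> out {out:6.2f}dB (reduction {level - out:.2f}dB)")
```

In this model a full-scale peak is pulled down by a little over 3.5dB while material at -20dB loses under 2dB, so the dynamic range is gently narrowed across the whole track rather than just on the peaks.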

If you have a good separate limiter, you can also use this to make the mix louder by limiting the top 3 or 4dB of the signal. I like the Waves L1 limiter for this task as it sounds very transparent and automatically increases the signal level so that it peaks at the limiter threshold (also user adjustable). It's almost like normalising and limiting in one operation.