However much studio trickery is considered 'normal' in a genre, the unwanted side-effects of processing can rob your mixes of impact. But it doesn't have to be that way

Most of us are so accustomed to the side-effects of routine processing such as EQ and compression that we take them for granted. Indeed, some people will never have learned to identify them, but most will have experienced the cumulative effect: your carefully crafted mix inexplicably lacks definition and impact. To prevent that, I believe the good old KISS principle must be applied (Keep It Simple, Stupid!).

But by keeping production simple I don't mean being lazy or accepting sub-par results. Rather, I mean taking care to preserve the integrity and definition of the source sounds; making sure your processing doesn't damage them. You should still strive to achieve all the artistic goals of every project, of course, and it's fine to use processing to do that. But if you deliberately choose tools and methods whose side-effects cause the least possible amount of collateral damage, your mixes will sound better.

It's a simple idea in principle, but it requires you to understand and learn to recognise the side-effects of any processing you apply. In this article, I'll explain what these side-effects are, how they can compromise the integrity of the sources in your mix, how you can learn to listen out for and notice such damage, and what alternative recording/mixing tactics you can try to avoid these problems.

Natural Goodness

Underlying my approach is the idea that a mix should sound 'natural'. But what exactly does that imply? When trying to capture acoustic music, judging the effect of your engineering on the sound quality is relatively easy, as you have a real-world reference; you know what's 'natural' and if the result sounds that way you've done a good job. (For that reason, recording acoustic music is a great way to build your critical listening skills.) But the term can apply to less obvious styles too.

You could argue that in synthesis and electronic music anything goes; that no treatment of the signal sounds 'natural' or 'unnatural'. But anyone making such music knows this isn't true. It's common to render musical events that never took place acoustically, to transform recorded real events into something else, and to place events in 'spaces' that never existed. But we still perceive some such sounds as being more natural than others. The difference is down to how often we've heard similar sounds: mere exposure to sounds changes our frame of reference of what sounds natural. Just as audiences grew used to the sound of the overdriven electric guitar and new genres emerged in the '50s and '60s, so too the average clubber or rave-goer knows what an 808 kick or a Moog bassline should sound like. Not everyone can picture these sound sources in the way they can when, say, a hammer strikes a glass object, but they still attach a mental image to the sound. They recognise it as part of a musical style, and if it matches their expectation it sounds natural. This article covers all sound sources, whether their origin is acoustical, electrical or a combination of the two, though they do have to be embedded in the culture of your intended audience.

Note that there's no single ideal reference. You don't make every cello sound the same, because your reference of what a cello is has some variables. But a cello sounds like a cello, and if your rendering of it evokes the right image for the listener, you've done a good job. The same goes for techno kicks or stacked walls of organ-like synths: if the audience recognises what you intend, the production works.

We can widen our definition of 'natural' to encompass sound quality, for which it means something like: unprocessed, intact, or pure. Or better, faithful to the original. The listener's frame of reference makes it possible to think about being as faithful as possible to this reference. Whatever processing is used to arrive at the final sound, the threshold between what we do and don't accept as natural is crossed when it becomes unclear what mental image should be connected to a sound (is this a cello?). Or, worse, when the sound falls apart, and stops sounding like a single source.

Lyrical Intelligibility

This is neatly illustrated by a specific problem I'm noticing increasingly in heavily processed pop productions: despite vocals being mixed loud and proud, it's often harder to understand the lyrics than in productions that rely less on pitch processing and multiband compression. Going purely by the mix balance, I'd expect crystal-clear intelligibility, but the opposite is true.

I believe that the core of the problem is that the rendering isn't faithful enough to the original to evoke the right mental images. The producer's aim would obviously not have been to make the lyrics unintelligible, but rather to make the vocal tight in pitch and bright in timbre, and generally to lend it a more slick, polished appearance. The unintelligibility problem is an unintended side-effect of the processing used to accomplish those goals.

To understand what's going wrong, you must consider how we hear sounds in a mix. When listening to a vocal (in any language), you're trying to recognise patterns that a single vocal would typically produce and match them to your reference library of mental images. To do so, your brain attempts to separate all the spectral components the vocal produces from any surrounding sounds, and connect them into a single source image. The 'stream' of sounds this source produces is then screened for familiar language patterns. The process is impeded if you can't easily connect the different frequency components the vocal produces into a single stream.

As some processing can act to 'break up' sources (eg. it could lead you to perceive the highs and lows of a vocal as two separate entities), it can make it hard for the listener's brain to do its job. If you heard the voice in isolation, it would be easy enough to understand the lyrics — it could probably sound more natural, but you can understand what's being sung. But when you add in the accompanying instruments, your brain has to unravel which streams of information belong together and which don't. Imagine you were to divide a sentence between two people, each speaking alternate syllables — how much harder would it be to understand the sentence than when a single person pronounced the complete pattern? In short, your brain must work harder to separate the voice from the instruments in a mix before attempting to decipher the lyrics. And if the other elements have been similarly 'over-processed' it has to work harder still.

Note that I'm not describing the effect of masking, whereby we fail to detect parts of the sound because they're being obscured by louder tones in other sources. It only concerns the sequences of frequency components we can detect in the mixture and our ability to determine which have the same cause and which don't. A mix that makes this mental ordering process easy will have good definition, while a mix that makes it difficult tends to sound like a mush and be difficult to listen to, because it demands a great deal of 'brain power' to get to the information.

Seemingly 'innocent' use of processors such as filters, EQ and limiters can all too easily damage two sound properties that are very important for our ability to identify individual sources in a mix: transients and overtone synchronisation.

The Order Of Things

So what are the main sound properties that facilitate our ability to order musical information streams, and what sort of processing interferes with those properties?

Source location. In nature, frequencies that arrive consistently from exactly the same direction are likely to share the same cause. So any processes that mess with the stereo image (eg. cross-panning signals from multiple mics used on one source, and effects like stereo chorus or phasing) can make it harder for a listener to localise the source, and harder to piece together the 'stream' for that source, which leads to a blurring of information. This is useful if you want to make one sound harder to detect than another, but not generally useful for something that needs to sound well-defined and upfront.

Transients. These are very important to our ability to link different frequency components together, as sounds that start and stop at exactly the same moment appear to belong to the same source. When any processing weakens or blurs transients, it becomes more difficult to recognise and distinguish sources. In particular, assertive limiting or too much saturation will cause a loss of definition, as can any processing that messes with the time response (resonant filters and reverb, for instance). Even the structure of the music itself can cause difficulty; due to rhythmic synchronisation, the transients of multiple sources tend to overlap. This means you need more cues to separate sources playing in synchrony, like the individual voices in a chord.

The 'purity' of the source. Sounds that are the easiest to separate in mixtures are synthetic, monophonic sounds without modulation, that are rich in harmonic overtones and aren't treated with reverb or filters. Their frequency components are strongly related in time: once every cycle of the fundamental frequency, all the different overtones line up in phase, creating amplitude peaks in the complex waveform that occur at the rate of the fundamental frequency. The ear/brain can detect these pulsations across different frequency bands, and so determine which overtones belong to which fundamental, and thus which source. Even when overtones aren't harmonically related, they tend to align once every fundamental cycle because they share the same excitation mechanism, which moves at the fundamental rate. Look at the waveform of complex periodic sounds (like a piano tone or a diesel engine) and you'll spot these pulsations easily.

Any processing that disturbs the time relationship between overtones will decrease the pulsation and make the overtones sound less connected to a single source. Phase distortion in steep filters, layering sounds in unison (with slight detuning) or adding short reflections can all make overtone relationships harder to perceive. Some sounds are more resistant to these blurring effects than others. Synthesized pure tones or high-frequency sounds with sharp transients (eg. tambourines) can take a lot of processing before they blur, whereas a violin recorded at precisely the right distance in a concert hall can fall apart when applying only slight EQ.

Putting Theory Into Practice

I ran a small experiment to reveal the nature of these problems. First, I asked a group of music production students to play back a test signal through the vocal channel of the last mix they worked on and send the resulting audio to me. The students recorded two versions: one with all processing enabled, and one with any equalisers they'd used set in the neutral position. The test signal consisted of some tones, some impulses and a voice recording (made using a signal path with minimal phase distortion: a Schoeps MK2 pressure microphone and a transformerless Crane Song mic preamp and A-D converter, with its high-pass filter disengaged).

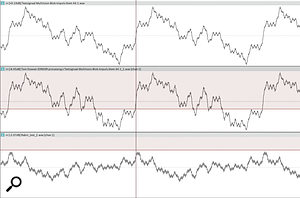

Diagram 2: The difference in impulse response between a vocal chain with good definition (middle) and one with poor definition (bottom). The original spike can be seen at the top of the image. For a meaningful comparison, any EQ in the vocal chains was set to neutral before recording the response. Any effect on display here is the result of filters in multiband compressors, tape simulators and up- and downsampling in plug-ins.

Diagram 2: The difference in impulse response between a vocal chain with good definition (middle) and one with poor definition (bottom). The original spike can be seen at the top of the image. For a meaningful comparison, any EQ in the vocal chains was set to neutral before recording the response. Any effect on display here is the result of filters in multiband compressors, tape simulators and up- and downsampling in plug-ins. Diagram 3: The difference between two chains on the phase relationship between the overtones of a complex harmonic signal. The top of the image shows the original signal. Note the high peaks occurring at the fundamental rate, when all the overtone phases align. The chain with good definition (middle) keeps these relationships intact. The one with poor definition (bottom) shifts the different frequencies in time, weakening the periodic peaks.

Diagram 3: The difference between two chains on the phase relationship between the overtones of a complex harmonic signal. The top of the image shows the original signal. Note the high peaks occurring at the fundamental rate, when all the overtone phases align. The chain with good definition (middle) keeps these relationships intact. The one with poor definition (bottom) shifts the different frequencies in time, weakening the periodic peaks. Diagram 4: The top waveform is the original speech recording. The middle one shows that signal processed through a vocal chain which provided good definition. The chain with poor definition (bottom) generated higher waveform peaks due to random shifts in phase, and has weakened the transients — some of the energy now arrives late, making it more difficult to recognise the start of the vowel.I then level-matched the files they sent back. The processing involved made this tricky (compression and distortion change the level in more complex ways than static gain does) and, as loudness normalisation didn't deliver accurate enough results here, I opted to balance the recordings by ear, as I would when placing a vocal in a mix. This way I could match the perceptual loudness between the different versions.

Diagram 4: The top waveform is the original speech recording. The middle one shows that signal processed through a vocal chain which provided good definition. The chain with poor definition (bottom) generated higher waveform peaks due to random shifts in phase, and has weakened the transients — some of the energy now arrives late, making it more difficult to recognise the start of the vowel.I then level-matched the files they sent back. The processing involved made this tricky (compression and distortion change the level in more complex ways than static gain does) and, as loudness normalisation didn't deliver accurate enough results here, I opted to balance the recordings by ear, as I would when placing a vocal in a mix. This way I could match the perceptual loudness between the different versions.

To establish how hard it would be to recognise the different versions of the voice in a complex mixture, I added pink noise, balanced at such a level that the original (unprocessed) recording was barely intelligible; boosting it by only a decibel more would have prevented me hearing what was being said. Then the students and I auditioned each level-matched, processed voice recording in the same way, and ranked them in order of least to most intelligible.

What did this show? The headline is that, even though some sounded a lot brighter and some made softer syllables relatively loud, every processed version was less intelligible than the original. There were a few other points to note: the vocal chains that delivered the most intelligible results featured only small amounts of EQ and only slow compression; small amounts of harmonic distortion had surprisingly little influence on the intelligibility; and the chains that performed worst were those that introduced large phase shifts (analysis of the impulse response gave that away), and whose fast compression, peak limiting or heavy saturation distorted transients. When listened to in isolation, though, all the processed voice recordings were clearly intelligible; only in the context of a dense mix was the loss in definition obvious.

Practical Implications

It seems clear to me that seemingly 'innocent' use of processors such as filters, EQ and limiters can all too easily damage two sound properties that are very important for our ability to identify individual sources in a mix: transients and overtone synchronisation. Indeed, any processing causes at least some form of signal degradation, yet the benefits of processing can massively outweigh the disadvantages. There's no point avoiding using an EQ or an amp simulator when there's an artistic need for them. The golden rule is that if what you hear in the context of the mix works well musically, you're doing a good job.

But it does make good sense to consider how many different tools you're using, and what purpose they serve. Now that computers make almost limitless processing possible this is more important than ever before. I've come across many mix projects where the engineer had stacked 12 consecutive plug-ins to fashion a certain vocal sound, when it would have been possible to achieve the same artistic goal using only two. It's my contention that, had fewer processors been used, the vocal could probably have been turned down without losing any definition or intelligibility, and that this would have allowed the other instruments to sound more impressive. There's no harm in using lots of plug-ins in the search for an approach that achieves your artistic goals, but once you've decided on that approach, consider cleaning up the chain you use to achieve it.

When To Worry?

Concerns about signal degradation have little place in the creative process, so I wouldn't advise you to think actively about the things I've mentioned in this article all of the time. When you feel it's easy to be swept off your feet by a piece of music, when you lose yourself in it even while working on it, you'll know you have nothing to worry about.

So I only focus on this side of things when I feel that the mix is suffering from a lack of definition. When I notice that I'm boosting more and more high end to make the mix sufficiently transparent, or I start to feel the need to reach for multiband compression, I see that as a sign that there may be problems with the arrangement, the stereo placement, or the signal quality of the source sounds and ask myself if my processing is perhaps doing damage that could do with unpicking.

Note that the likelihood of problems varies with the source. With delicate material that's already been at the mercy of room reflections it's easy to do more harm than good, whereas close-miked or synth signals can generally take quite a beating. In fact, lots of processing on the latter types of signals may even make the mix seem better defined! Also worth mentioning is that a mix can sound too defined; if you sacrifice the overall 'blend' to make individual components more distinctly audible, your mix won't sound like music anymore. You need to make a judgement about how defined each sound should be; it's probably going to be more critical for sources that you wish to be very clearly defined, like a lead vocal or solo instrument, and less so for those you deliberately want to be pushed back in the mix.

If you find it hard to resist piling on those plug-ins, you could try approaching your projects differently to make that less tempting. For example, since I realised that EQ isn't always the optimum solution, I've spent much more time fine-tuning the mix balance while using as little EQ as possible and find that this almost automatically results in more effortless-sounding mixes.

I'd like to leave you with one phrase that I think says the most about subjective audio quality: 'cognitive load'. How hard is it to listen to the music? How much effort is required to hear what's going on? Can you identify which sources are playing and where they're located? Ask these questions and answer them honestly. A high cognitive load, where it takes lots of effort to hear things clearly, is rarely a good sign. Only when you wish to present chaos as an effect does it make sense, and even in highly complex music your aim is that the listener can connect easily to that complexity. Good definition makes this possible.

About The Author

Wessel Oltheten works as a mix and mastering engineer in his Spoor 14 studio. He also teaches audio engineering at the Utrecht University of the Arts, and is the author of Mixing With Impact, a book about mixing that's available worldwide through Focal Press. For more details, visit www.mixingwithimpact.com

Disengage Filters!

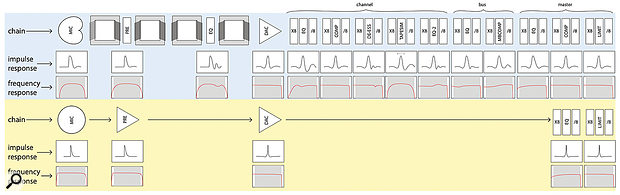

Diagram 1: A typical recording and mixing signal chain for a pop vocal production (top) versus one for classical music (bottom). Any component affecting the frequency response (ie. acting like a filter) also affects the time response, illustrated here by the impulse response.

Diagram 1: A typical recording and mixing signal chain for a pop vocal production (top) versus one for classical music (bottom). Any component affecting the frequency response (ie. acting like a filter) also affects the time response, illustrated here by the impulse response.

My little experiment suggested that the biggest culprit for loss of definition was the phase shifts caused by filtering, and once you've realised the harm these time-domain distortions can do you'll look very differently at a typical recording and mixing chain. As shown in Diagram 1, filters can be found in several places, including:

- The microphone. Directional (pressure-gradient) microphones roll off low and high frequencies more than omnidirectional pressure microphones. Many mics also include a switchable high-pass filter. (Directional mics may have a worse phase response than pressure microphones, but they still have the potential to sound more defined because of the rejection they offer).

- The mic preamp. All transformer-based models have a limited bandwidth, but some transformers/preamps have a higher bandwidth than others. Transformerless preamplifiers can perform better, but a lot depends on the design — designing high-gain amplifiers that have low distortion and high bandwidth isn't trivial. Like mics, many preamps feature a switchable high-pass filter.

- Analogue outboard/mixing desk. Again, these can feature input and output transformers. Any EQ or filter stage will also affect the frequency response. The degree of harm an equaliser will do depends on its design, what you use it for, and how radical your settings are. Stereo recordings of acoustic music are especially delicate; just a little phase distortion and the image can become blurred. On the other hand, a bone-dry hip-hop mix can often bear lots of assertive EQ before definition is lost.

- The A-D converter. These are restricted at the Nyquist frequency (half the sample rate). The lower the sample rate, the steeper the low-pass filter needed to let frequencies through and provide enough rejection above Nyquist to prevent aliasing distortion. The steeper the filter, the stronger the time-domain effects (phase shift or ringing).

- Plug-ins: as well as the obvious switchable filters and EQ, these can use filters for their core functioning, like the crossover-filters in a de-esser or multiband compressor. They also use filters in any up- and down-sampling stages (much like the filters in A-D/D-A converters). And analogue modelling plug-ins can cause the same filtering effects as analogue gear.

High-pass filters (HPFs) can be useful for removing subsonic rumbles, but they also change the phase of the signal — and such thuds and rumbles are rare in professional studios. So try not to use HPFs as a 'safety' feature, but instead to engage them only if there's something you actually wish to remove.I believe it's a mistake to engage high-pass filters only as a precaution, as in decent studios there's no low-frequency rumble, yet the phase shift of multiple high-pass filters can perceptually 'shrink' the audio — an effect that's audible much higher up than a filter's corner frequency. Yet you can easily end up filtering the low end in at least five positions in a typical setup, as shown in Diagram 1. If you bypass any unnecessary filter stages, and make sure the necessary filters (like transformers, or A-D/D-A conversion stages) are good quality, and have them operate as much as possible outside the frequency range you're interested in, you can achieve purer-sounding results.

High-pass filters (HPFs) can be useful for removing subsonic rumbles, but they also change the phase of the signal — and such thuds and rumbles are rare in professional studios. So try not to use HPFs as a 'safety' feature, but instead to engage them only if there's something you actually wish to remove.I believe it's a mistake to engage high-pass filters only as a precaution, as in decent studios there's no low-frequency rumble, yet the phase shift of multiple high-pass filters can perceptually 'shrink' the audio — an effect that's audible much higher up than a filter's corner frequency. Yet you can easily end up filtering the low end in at least five positions in a typical setup, as shown in Diagram 1. If you bypass any unnecessary filter stages, and make sure the necessary filters (like transformers, or A-D/D-A conversion stages) are good quality, and have them operate as much as possible outside the frequency range you're interested in, you can achieve purer-sounding results.

So next time you engage a high-pass filter, do so for a reason and then listen critically. Try to notice if the fundamental 'disconnects' from the upper frequency range (even when it isn't attenuated in level), making the instrument seem a touch smaller. And note that a low-shelf boost can restore some of a source's apparent size, which is a common mix move for me when faced with sounds that were recorded with unnecessary high-pass filtering. That's only possible when there are no problems with low-frequency rumble or electrical hum (so strive to make good recordings that extend well outside the frequency range you're interested in hearing).

Better Definition: Dos & Don'ts

Some of the tips below could help you achieve more definition and clarity in your mixes.

Don't... let problems guide your decisions. If you don't pay disproportionate attention to problems, you'll be less tempted to try fixing them regardless of the cost. Instead, base decisions on strengths instead of weaknesses — you'll strike a better compromise between your artistic goal and signal degradation. For example, say you've noticed that a voice has eight different kinds of 'sharp edges'. Try to solve all these problems using notch filters and de-essers, and whatever you achieve will be at the cost of some disintegration of the signal. Instead, focus on what it is you like about the vocal — perhaps it has an appealingly warm low-end — and you can build on that with some dark reverb which also distracts the listener from those 'problems'.

Do... invest in clean-sounding recording and monitoring. High-quality hardware or software challenges the myth that 'clean' recordings sound boring, sterile and cold. Indeed, clean can sound remarkably powerful: it can be bright without sounding harsh, it can be full-bodied without sounding muddy. The less distortion the gear introduces (due to filtering, phase shifts, limited resolution, rounding errors, jitter, aliasing, saturation, clipping etc.), the more of the source you hear. With recording and monitoring paths that are as clean-sounding as possible, you have a reference point, and you'll be able to tell when a sound calls for more colour via processing.

Limiters can be a really useful tool if you want to 'soften the edges' of a sound, but no matter how high in quality the specific tool, there's little to be gained in using them to increase loudness.

Limiters can be a really useful tool if you want to 'soften the edges' of a sound, but no matter how high in quality the specific tool, there's little to be gained in using them to increase loudness.

Don't... limit for loudness. In my vocal-intelligibility experiment, peak limiting was the second-most damaging process (after steep filtering). Not only does it soften the transients that are crucial to our sense of definition, but a heavily limited sound takes up more space in a mix because the transients can't rise as far above the surrounding sound when mixed at a similar loudness. Luckily, because loudness is now regulated on most playback platforms there's little need to limit for loudness — definition is the new loud! So try to use a limiter only when transients are drawing too much attention; if an instrument sounds overly pronounced and close, you can make it easier on the ears by softening some of its edges.

Do... use slow compression. Although limiting damages definition, leaving musical dynamics untouched does not guarantee a good mix. Instruments with a wide dynamic range can be very hard to balance with other sounds, and regulating each part's dynamics to make it work with the others is one of your main tasks as a mixer.

Think of a singer whose high notes are much louder than low ones because (s)he is stretching to reach them, or a synth with a modulating filter that's both brighter and much louder at times. These kinds of dynamics can be regulated most transparently using a fader, but a slow-acting compressor can also do a great job, saving you time. It's important that the compressor acts slowly enough that the transients remain untouched. By stabilising dynamics with a slow compressor, you prevent sounds from ever being too loud or too soft in the mix, but you're still allowing the transients to rise over the surrounding sounds.

But also consider which source's dynamics need manipulating. For instance, when a singer is loud it's often for a good reason, and by increasing the level of the accompanying instruments at that moment you can maintain a good balance between them and the vocal, increasing the sense of overall dynamic development without damaging to the vocal. If, on the other hand, a part is too dynamic, you're better off restricting the vocal's dynamic range than building your mix on top of it.

Another downside of compression is that a compressor can't know which parts are musically important. Thus it can boost softer elements that, musically, didn't need bringing up. This increases the risk of masking, and could require other sounds to be made more 'present' — by using more processing. I find that using manual automation in conjunction with compression can save you from entering such a 'processing spiral'.

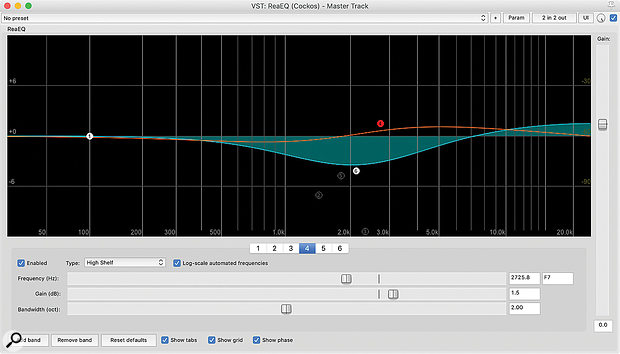

These EQ screens show the different effect on the phase response of three narrow cuts versus one broader dip. It's often better if you're able to focus on what's good about a sound, rather than attending to every little problem!

These EQ screens show the different effect on the phase response of three narrow cuts versus one broader dip. It's often better if you're able to focus on what's good about a sound, rather than attending to every little problem!

Do... simplify EQ. As with dynamics corrections, if you employ EQ gently it can improve definition instead of taking it away, and it all starts with a simple rule: avoid narrow bandwidths. That means, wherever possible, no steep slopes, no high Q factors and no high resonances. A 6dB/octave slope may not look much, but its time-domain response is far better than steeper filters.

If you want to improve definition with EQ, attenuate the part of an instrument that clashes most with other instruments, or emphasise the part you're after until it surfaces enough. These actions usually span an octave or two, so wide EQ bands suffice. As wide bands cause very little phase shift, the integrity of the instrument in the mix is better maintained. 'Zooming in' on problems using notch filters does the opposite: yes, disturbing frequencies are attenuated, but the sound can become harder to discern in the mix. Hence, I tend to do some 'cleaning up' after I've been on a search with EQ: if I find I'm taking out three different narrow bands in the high-mids, I'll try attenuating one broader area instead, or emphasising a broader area below. The result can be almost the same, but with better definition.

A good strategy for retaining definition in any multitrack production is to use as little EQ as is necessary on the most important sounds, other than to correct problems with the sounds themselves. The surrounding sounds can be EQ'd more radically. This way, the most important information is the easiest to dissect from the mix. Because well-defined sounds can be placed at a relatively low level in a complex mix and still be clearly audible, they leave more room in that mix for other sounds. This allows you to create denser mixes that still sound transparent — the Holy Grail!

Do... compensate for known anomalies. Phase is inherently connected to frequency response, so if something in the recording chain caused a change in frequency response (like the low-frequency roll-off in a cardioid mic), reversing this with EQ will correct the phase response. To me this explains why on some sources I tend to boost generous amounts of very low frequencies: I don't do it because I want to hear sound at those frequencies, but because it makes the frequencies above sound more coherent and less 'boxy'. This is probably a reason why the 'Pultec trick' of boosting a shelf at a very low frequency while simultaneously cutting just above works so well on cardioid vocal recordings. The boost compensates for the ultra low-frequency roll-off of the mic, while the cut reduces the proximity effect's low-frequency boost. The result is a flatter-sounding frequency and phase response. More complex phase issues can't be corrected in this way, because you don't know what caused them. Interference of multiple reflections can never be undone, for instance.

Mythbuster: Phase-shift

Many people mistakenly believe that the human ear isn't particularly sensitive to phase shifts of individual signals, because the relative timing of different overtones doesn't change the overall frequency content — when you randomly move the harmonics of an instrument using an all-pass filter ('phase rotator'), you'll hear little change to the frequency content. But evaluate the effect in the context of a dense mix and you can notice the instrument sounding less coherent.

Phase does matter: in the mix, it affects our sense of individual sources sounding 'defined' and, consequently, the clarity and impact of the whole mix. (Another good reason for always EQ'ing sources in the context of the mix rather than soloed.)

Upsampling

Digital processing causes side-effects, just as analogue processing does. For instance, many plug-ins internally increase the sample rate to increase high-frequency accuracy and to accommodate any additional high harmonics they may generate. Up- and downsampling require the use of steep filters. As these must be able to operate in real time and with low latency their design is usually a compromise and they can cause side-effects like distortion, phase shift, ringing or transient smear.

Every filter has time-domain effects of one sort or another. Regular filters change the phase relationships between overtones and 'ring' after the source at narrow bandwidths. Linear-phase ones retain the existing overtone phase relationships but at the cost of added latency and audible ringing. The ring is of the same duration but starts before the source, which can make it more perceptible. Which type of EQ is better in a given situation can vary, but the steeper the filter slope, the larger the side-effects, and the effects are cumulative. So the fewer upsampling plug-ins you stack, the more definition you should retain. Turning off upsampling will only replace one problem with another, as it can increase aliasing. Working at high sample rates will make a difference, as this lowers the filter steepness required in the up- and downsampling stages.