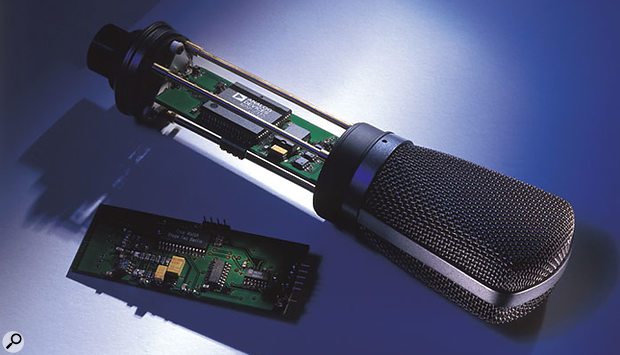

Beyer made the world's first 'digital' mic, the MCD100 (actually a digital/analogue hybrid), in 1998.

When is a digital mic really a digital mic? Cutting Edge looks at the hybrid digital/analogue technology we already have and suggests a radical new approach.

I was in one of the big consumer electronic shops at Christmas, where I overheard a conversation between two people. They were discussing the reasons why one digital radio should cost £90, when the one next to it — which appeared to have more features — only cost £34.

The answer was that the expensive one was a true Digital Audio Broadcast (DAB) receiver and the other just looked like a digital radio because it had a similar-looking LCD panel. The confused customers obviously thought that if the radio displayed digits, it was digital. Not their fault: how many people out there actually understand the intricacies of radio signal modulation as a transport layer for digital audio data? Never mind compression, multiplexes and metadata.

As readers of such esteemed journals as Sound On Sound, we've got a much better understanding of the question of digital versus analogue domains, haven't we? But I want to look at a couple of areas in this article where even 'experts' like us have to be a bit careful when we describe something as digital or analogue.

Now Hear This

We're pretty familiar now with the concept of an 'all-digital studio'. Widely available digital interfaces such as AES/EBU, S/PDIF, MADI, the resplendently named TOSLINK, and TDIF, to mention a few, all plug together nicely — usually via a digital mixing console — to facilitate that previously utopian idea, the digital music production workflow. The only slug in the feta salad is that we can't hear digits. And we're not likely to evolve any sort of direct optical digital input to our cognitive system — at least not for a few years yet. So the totally digital signal path from creation to user perception is, unfortunately, impossible. Impossible, and irrelevant.

Our ears are analogue devices. So are all our other senses. And so are the phenomena that our senses sense. When we sing, for example, that's analogue. For a sound to be a sound, it has to be analogue. You can, of course, use sound to carry digital information (modems spring to mind), but the sound used to carry the digital data — and this also applies to two people verbally exchanging telephone numbers — remains resolutely analogue.

None of this is too difficult to get to grips with. Until someone bio-engineers upgradable cognitive codec implants, we're always going to have to revert to the analogue domain to get any information whatsoever into people's awareness. Which brings me to digital microphones.

Ever since I came across digital audio, I wondered when we'd see the first digital microphone. In a way, it just seems obvious: given that we've got digital everything else, it's only a matter of time before we have digital microphones. But the more you think about the idea of a digital microphone, the more the concept just seems to evaporate. I'll explain what I mean by this.

Sound is definitively analogue. Which means — at the very least — that the pressure waves in air, or any other medium for that matter, are not divided up into discrete steps. Sound vibrations are not in any sense quantised, and if you measured the intensity of a sound vibration you would be very unlikely to find that it corresponded exactly to any kind of regular scale. What's more, the changes in pressure that make up the sound are just that: they don't represent the kind of 'higher' meaning that, for example, the numbers embedded in an audio CD do.

So whatever else a digital microphone might do, it has to incorporate some kind of 'analogue phenomenon' to 'digital phenomenon' converter. Maybe, then, that's what a digital microphone should be: a device with a conventional analogue transducer, connected at close quarters to an on-board analogue-to-digital converter — which seems perfectly reasonable. I can't see any reason why we shouldn't call a microphone that has an analogue capsule at one end and a digital audio cable coming out of the other end a digital microphone. Indeed, some people already do (www.beyerdynamic.com/com/product/index.htm).

I must admit, though, that I don't consider this to be a truly digital device. All we've really done is move the A-D converter a little bit closer, physically, to the microphone capsule. There's absolutely nothing wrong with that concept (and you can see the sense of minimising analogue cable runs, especially when you're dealing with such extremely low-level and noise-sensitive signals as you get from a typical microphone), but to me it points to nothing more than an incremental and somewhat debatable improvement in microphone performance. If the 'hybrid' digital microphone, as I'd prefer to call it, really is a massive improvement, you have to ask why everyone isn't making them. (It could simply be the additional cost of analogue-to-digital conversion, of course.)

Digital Loudspeakers

There's a lot of symmetry in concept between loudspeakers and microphones, and you can sometimes use a loudspeaker as a rudimentary audio sensor. Pretty well all the arguments used above (apart from the optical ideas) could apply equally well to loudspeakers. Indeed, there are what I call hybrid digital loudspeakers available, which accept a digital audio feed and have on-board D-A converters.

A company called 1 Limited did appear to have built a genuinely digital loudspeaker — one where the digital-to-analogue converter is the very same thing that puts the air into motion. Apparently, the results could have been better with the prototypes, and the product seems not to have been pursued, but that's no reason at all to discount the technique. If someone were to pick this up and run with it, it could turn out to be a massive development in speaker technology.

Optical Delusions?

I suspect we're not going to see a huge improvement in microphone performance without adopting a radically different approach to the whole problem. You also have to wonder what aspect of conventional performance you actually could improve massively, when the devices we have now are so good anyway. Only when we started using 24-bit, high sample-rate recording did we get anywhere near being able to record the full dynamic range and subtleties of a good microphone, so in one sense digital recording technology has actually prevented the full use of the ultimate sound quality obtainable from such a mic.

It's important that you realise that I'm no expert on microphones. Whenever I talk to microphone manufacturers, I'm astonished at the art and precision that go into designing and manufacturing their products. Far be it from me to suggest a better way to do it. But I've always had a nagging suspicion that you could use optical techniques to make a microphone, and that this could have advantages over the way it's done conventionally. The idea would be to shine a beam of light obliquely at a reflective diaphragm and somehow track its reflection, the position (or, perhaps, the intensity) of which would represent the amplitude of the incoming audio at an instant.

This approach would have two possible advantages. First, light beams don't weigh anything, so the only inertia involved would be that of the diaphragm itself. That's not much different to conventional microphones in this respect, except that there would be no magnetic or electrostatic fields to 'damp' the movement. Second, a light beam would be immune to electromagnetic interference. Another quirky aspect of this technique would be that you could use the movement of the light beam to 'amplify' the audio signal, simply by moving the light beam detector further from the diaphragm. The further away the detector, the greater the apparent movement (although not necessarily the meaningful content of the movement).
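To put rough numbers on that 'amplification' idea, here is a minimal Python sketch. It rests on an assumption that isn't spelled out in the text: that the diaphragm's movement tilts the reflecting spot by a small angle, as in a classic optical lever, so that the reflected beam swings through twice that angle. The function name and the figures used are purely illustrative.

```python
import math

def spot_displacement(tilt_rad: float, detector_distance_m: float) -> float:
    """Movement of the reflected spot across a detector plane.

    Assumption: the reflecting surface tilts by tilt_rad, so the
    reflected beam turns through 2 * tilt_rad (optical-lever geometry).
    """
    return detector_distance_m * math.tan(2.0 * tilt_rad)

# Illustrative tilt of 0.1 milliradian; try the detector at three distances.
TILT = 1e-4
for distance in (0.01, 0.02, 0.04):
    shift_um = spot_displacement(TILT, distance) * 1e6
    print(f"detector at {distance * 100:.0f} cm: spot moves {shift_um:.1f} micrometres")
```

Under this assumption, doubling the detector distance doubles the movement of the spot, which is the 'gain' described above. Any wobble or noise in the beam would be scaled up by the same factor, so the extra movement isn't necessarily extra information.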

Needless to say, this has already been thought of.

The Digital Genome

It's worth remembering that the human genome is in fact a digital system. It's not binary, like most computers, but quaternary, with four possible constituent values. What we look like and who we are is thus determined by biological digital data.

A Few Steps Closer

Whatever the state of the art of optical microphones, they're still not digital. But we are coming to the point: would it be possible to use an optical technology to create a truly digital microphone?

For me, a device is truly digital if the output from it is quantised into discrete, meaningful steps. It wouldn't have to be a binary output, because it's easy to translate any kind of number scale into any other. Nor would it have to be responsible for its own sample rate, because that's strictly a matter of deciding how often you're going to read the quantised output, something that can be determined internally or externally to the device.

So how on earth do you get a quantised output from an optical microphone? A starting point could be a particular device from the world of video called a CCD, or Charge Coupled Device. Essentially it's the image sensor that you find behind the lens in modern video cameras. Contrary to popular belief, it's not a digital device. The output from a CCD is analogue, and can be quantised to whatever resolution is deemed appropriate. So, on the face of it, CCDs are not the answer for our digital microphone.

A magnified detail of a Charge Coupled Device, showing its grid structure.

However, one attribute of CCDs that could well be useful is that they are typically made up in a grid structure. Now, remember that we're not necessarily trying to measure the intensity of the reflected light beam in the putative optical mic, but its displacement. So, by placing a CCD grid in the path of the reflected light beam, you could track the path of the light by monitoring the voltages generated from the CCD cells. This would produce a quantised readout that could be sampled at the desired rate, giving a pure digital output.

So far, so good. But there are a couple of things that still need attention. First of all, video CCDs are two-dimensional devices, whereas the movement of the reflected light beam is one-dimensional: a line, in other words. So a pixel array covering an area rather than a straight line is not ideal, not least because even high-definition arrays tend to max out at around 1920 pixels in a straight line. For even 16-bit audio we'd need over 65,000, and for 24-bit we'd need 256 times this number. So thus far our optical digital microphone is several orders of magnitude worse than we need it to be.
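The arithmetic behind those figures is easy to check. The short Python sketch below counts how many distinct beam positions each bit depth would need and works out roughly how many bits a single 1920-pixel row could resolve if each pixel counted as one step. The function names are my own, chosen for illustration.

```python
import math

def levels_needed(bits: int) -> int:
    """Number of distinct steps required for a given audio bit depth."""
    return 2 ** bits

def equivalent_bits(positions: int) -> float:
    """Bit depth that a given number of distinct positions corresponds to."""
    return math.log2(positions)

PIXELS_PER_ROW = 1920  # the high-definition figure quoted above

for bits in (16, 24):
    needed = levels_needed(bits)
    shortfall = needed / PIXELS_PER_ROW
    print(f"{bits}-bit audio needs {needed:,} positions, "
          f"about {shortfall:,.0f} times one row of pixels")

print(f"One row of {PIXELS_PER_ROW} pixels is worth about "
      f"{equivalent_bits(PIXELS_PER_ROW):.1f} bits")
```

A single row of 1920 pixels comes out at a little under 11 bits, which gives some idea of the size of the gap.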

I don't claim to have the answers, or even to know whether I'm barking up completely the wrong tree (or, more likely, barking mad); but here are a couple of suggestions. First, you could at least double the apparent resolution of the CCD sensor by comparing the output from adjacent cells. It's unlikely that the reflected light beam would be focused on exactly one pixel: at least some of it would spill over onto adjacent cells. We could use this to our advantage by assuming that if, for example, two adjacent cells were equally illuminated, then the light beam must be around halfway between them. This would instantly double our resolution. You could probably also say that if, in a pair of pixels, one was illuminated more than the other, perhaps the position of the beam was a quarter of the way towards one and three-quarters of the way to the other. And so on. Maybe you could even devise a really complicated mirror that would guide the light beam all the way along one line of pixels, and then switch it to the next row as the movement intensified, thereby 'scanning' the entire area of the CCD.
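The adjacent-cell comparison could be sketched as a weighted average of cell positions, with each cell weighted by how brightly it is lit. This is only one plausible way of doing it, not a description of any real sensor: the function name and the list of cell readings are hypothetical, and the sketch assumes the readings are proportional to the light falling on each cell.

```python
def beam_position(cell_levels: list[float]) -> float:
    """Estimate where the beam sits along a row of cells.

    Returns a fractional cell index: the brightness-weighted average
    of the cell positions (cell 0 is the first in the row).
    """
    total = sum(cell_levels)
    if total <= 0:
        raise ValueError("no light detected on any cell")
    weighted = sum(index * level for index, level in enumerate(cell_levels))
    return weighted / total

# Two neighbouring cells equally lit: the beam is halfway between them.
print(beam_position([0, 0, 1, 1, 0]))  # 2.5

# Cell 2 lit three times as brightly as cell 3: a quarter of the way across.
print(beam_position([0, 0, 3, 1, 0]))  # 2.25
```

The first reading corresponds to the 'halfway between them' case and the second to the quarter and three-quarters case. How finely you could subdivide in practice would depend on how accurately each cell's level can be read.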

At which point the feeble three-dimensional capabilities of my brain run out completely and I defer to the experts, who I'm sure will quickly put me in my place!