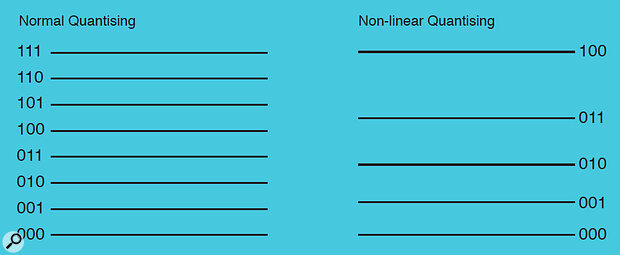

Figure 1: By arranging the quantising levels in a non‑linear way, low‑level signals appear to be quantised to high resolution. In contrast, high‑level signals may be encoded with a much lower resolution, but because the signal is loud it will tend to mask the increased quantising noise.

Though you might not realise it, the audio industry has employed data reduction strategies since the earliest days of digital systems. Hugh Robjohns explains the concepts and explodes some myths.

You might think of data compression and reduction as a relatively recent development in digital audio circles. Certainly it's only in the past few years that project studio musicians have started to become aware of data reduction techniques, various forms of which are employed in Minidisc recorders and some of the currently popular personal digital multitrackers to fit large amounts of audio data onto compact storage media without compromising perceived audio quality. But even in the Jurassic period of digital audio evolution, simple data reduction techniques, such as reduced sample rates and low‑bit quantising, were employed to avoid stretching the available recording and transmission media. These days, data reduction is far more sophisticated, and allows us to achieve more with less: more channels, longer recording time, or better perceived resolution, with smaller discs, narrower tape, and restricted data‑rate channels.

Although the process of reducing the amount of data needed to represent a digital audio signal is commonly referred to as data compression, the more accurate description is data reduction. Compression implies a reversible process (you can expand the compressed material to restore the original) but the majority of data reduction strategies are lossy, meaning that data is thrown away irretrievably. A loss‑less system (and there are a few) is one where some data from the original signal is not recorded, but can nevertheless be recreated perfectly on replay — truly data compression.

Data reduction is not restricted only to digital audio; noise reduction processes such as the Dolby and dbx systems involve data reduction in the analogue domain. They allow audio signals with a wide dynamic range to be stored on a tape medium with a restricted range — just as we try to squeeze a large amount of digital audio data onto a medium with a restricted data transfer rate. Many of the techniques of analogue noise reduction apply equally to digital audio data reduction, albeit with the far greater sophistication allowed by complex DSP algorithms. Perhaps this is one reason why the masters of analogue noise reduction, Dolby Laboratories, also make some of the most highly regarded digital data reduction systems.

Reduction Strategies

Figure 2: With the binary counting system employed here, the higher‑order bits mirror the sign bit when a quiet signal is being coded. Thus they are carrying redundant data and can be removed without damaging the audio signal, provided they can be replaced before replay.

Data reduction is used in digital camcorders, DVDs, laser discs, samplers, digital audio workstations, sample‑based keyboards, disk‑based multitrack recorders, radio and television broadcast networks, telephone systems, and many other applications besides. There is a bewildering variety of systems, many of which have fairly inscrutable names — eg. AC3, DTS, APT X100, MPEG, ATRAC, PASC, and G722. The last of these is an international telecommunications standard for limited‑bandwidth speech over the digital telephone network, and is only used in basic ISDN applications. The rest are intended for high‑quality stereo (and in some cases surround) audio, and allow the encoding of full‑bandwidth signals with wide dynamic range.

To give an idea of the efficiency of these systems, it's worth mentioning that loss‑less coding methods (in audio at least) cannot achieve much better than a 2:1 reduction in data rate, whereas the lossy systems can all exceed 4:1, and some provide as much as 12:1. As a rule, the higher the reduction ratio, the more obvious and distracting are the processing artefacts, but the latest generation of MPEG2 processes running at 12:1 ratios are remarkably good, if not yet completely 'transparent'.

There are four approaches to reducing the amount of data from a linear Pulse Code Modulation (PCM) signal: reduce the sampling rate; reduce the quantising resolution (number of bits); remove redundant data; or remove irrelevant data. The first two approaches offer only modest data reduction if a respectable degree of quality is to be retained, so most advanced systems rely on the last two techniques.

Sampling Rate Reduction & Quantising Techniques

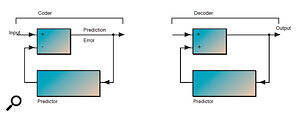

Figure 3: Simplified Predictive Coding structure.

One of the easiest data reduction techniques is simply to accept a reduced audio bandwidth by allowing a lower sampling rate. Many music samplers, older effects units, and all the digital broadcast systems adopt this approach.

This is a lossy technique, because once the highest frequencies are removed they cannot be replaced. Obviously, the lower the sampling rate, the greater the data reduction, but the price we pay is audio fidelity: the trick is to balance the top‑end response against an acceptable economy of data. Broadcasters, for example, employ a 15kHz audio bandwidth for their analogue transmission systems, so 32kHz sampling for digital formats such as NICAM and DAB is entirely acceptable, and represents a one‑third (33%) saving in data rate compared to a 48kHz signal (for more on why the sampling frequency should be at least twice the highest frequency you wish to record/transmit digitally, see part 1 of my series on digital recording in May's SOS).
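As a rough check on the arithmetic, the raw data rate of linear PCM is simply sample rate multiplied by word length and channel count. The sketch below assumes 16‑bit stereo purely for illustration, and the function names are hypothetical rather than taken from any particular system.

```python
def pcm_data_rate(sample_rate_hz, bits_per_sample=16, channels=2):
    """Raw linear PCM data rate in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


def saving(reference_hz, reduced_hz):
    """Fractional data-rate saving from lowering the sample rate alone."""
    return 1 - pcm_data_rate(reduced_hz) / pcm_data_rate(reference_hz)


print(pcm_data_rate(48_000))             # 1536000 bits per second
print(pcm_data_rate(32_000))             # 1024000 bits per second
print(round(saving(48_000, 32_000), 3))  # 0.333
```

Because word length and channel count cancel out, the saving depends only on the ratio of the two sample rates.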

When considering this approach for music recording applications, the sampling rate should be chosen very carefully to ensure that the harmonic structure of the source material is affected as little as possible.

Another simple technique is to reduce the number of quantising levels and thus the number of bits needed to describe each sample, although fewer quantising levels means more quantising noise and a smaller dynamic range. Some early digital effects units employed 8 or 12‑bit quantising, for example. This is another lossy technique, as low‑level detail in the original audio signal cannot be encoded in a low‑resolution system.
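To see why low‑level detail disappears, it can help to requantise a quiet sample at two word lengths. This is only a minimal sketch: samples are assumed to be floats between -1.0 and 1.0, the helper names are made up for the example, and the dynamic range figure is the usual textbook approximation for a full‑scale sine wave.

```python
def requantise(sample, bits):
    """Round a sample in [-1.0, 1.0) to the nearest level of a `bits`-bit quantiser."""
    levels = 2 ** (bits - 1)                     # levels per polarity
    code = round(sample * levels)
    code = max(-levels, min(levels - 1, code))   # clamp to the legal code range
    return code / levels


def dynamic_range_db(bits):
    """Textbook approximation: about 6.02 dB per bit, plus 1.76 dB."""
    return 6.02 * bits + 1.76


quiet = 0.001                        # a low-level detail, roughly -60 dB re full scale
print(requantise(quiet, 16))         # non-zero: the detail survives at 16 bits
print(requantise(quiet, 8))          # 0.0: the detail is lost at 8 bits
print(round(dynamic_range_db(8), 1), round(dynamic_range_db(16), 1))
```

The 8‑bit quantiser has no level close enough to the quiet sample, so it collapses to silence, which is the loss of low‑level detail described above.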

An alternative technique which offers a more acceptable balance between reduced quantising levels and increased quantising noise is to space quantising intervals non‑linearly (see Figure 1). The telephone system adopts this approach: it employs 8kHz sampling (giving a 4kHz bandwidth sufficient for intelligible speech) with 8‑bit non‑linear quantisation. The quantising levels are closely spaced for quiet signals, providing a low noise floor (equivalent to a 12‑bit system), but as the signal level grows so does the quantising interval. The noise level also increases of course, but since the audio is loud anyway, it will tend to mask the noise.

The technique works surprisingly well, although noise modulation can become noticeable if the louder audio signals don't contain a wide range of frequencies. A more serious problem is that digital signal processing of non‑linear quantised data is almost impossible, because a one‑bit increase for a small‑amplitude signal represents a very different level change to a one‑bit rise in a loud signal.
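One way to picture non‑linear quantising is as a logarithmic 'compress' stage followed by an ordinary uniform quantiser. The sketch below uses the continuous mu‑law curve as an illustration; it is an assumption made for the example and does not reproduce the segmented tables of any real telephone codec. All function names are hypothetical.

```python
import math

MU = 255  # mu-law constant, used here purely as an illustrative value


def compress(x):
    """Map a sample in [-1, 1] onto a logarithmic scale, still in [-1, 1]."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)


def expand(y):
    """Inverse of compress()."""
    return math.copysign(math.expm1(abs(y) * math.log1p(MU)) / MU, y)


def mu_encode(x, bits=8):
    """Compress, then quantise uniformly to a signed `bits`-bit code."""
    top = 2 ** (bits - 1) - 1
    return round(compress(x) * top)


def mu_decode(code, bits=8):
    """Undo the uniform quantiser scaling, then expand back to linear."""
    top = 2 ** (bits - 1) - 1
    return expand(code / top)


for level in (0.001, 0.01, 0.1, 0.9):
    code = mu_encode(level)
    error = abs(mu_decode(code) - level)
    print(f"input {level:<6} code {code:>4} error {error:.6f}")
```

The printed error grows with the input level, which is the trade described above. It also shows why processing the coded values directly is awkward: a step of one code near zero is a far smaller level change than a step of one code near full scale.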

Redundancy & Predictive Coding

The solid line shows the minimum threshold of hearing against frequency. The dotted line shows how the threshold changes in the presence of three loud tones at 250Hz, 1kHz and 4kHz. Although perfectly audible on its own, a tone at 5kHz (shown as a vertical line) is below the new threshold, and will therefore be masked and inaudible.

Another technique is to remove 'redundant data', ie. data that does not carry useful audio information. This may be done in a 'loss‑less' fashion, for example by removing the higher‑order bits when a PCM signal is very small (see Figure 2). In this illustration, the 'unused' bits mirror the most significant or 'sign' bit (indicating the positive or negative audio cycle). A quiet signal does not traverse many quantising levels, and the higher‑order bits remain static, not conveying anything useful at all, so they can be removed without affecting the quality of the original audio. However, some means of indicating the number of missing bits is necessary so that these can be reinstated before replay. In this way the data rate can be reduced significantly without altering the recreated audio signal at all. An everyday example of this approach is the NICAM television system, which employs this idea as part of its data reduction strategy.
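The idea of dropping bits that merely copy the sign bit can be sketched for one block of samples as follows. This is a simplified illustration under stated assumptions (16‑bit two's‑complement samples, one 'removed bits' count per block, hypothetical function names); it is not a description of NICAM's actual data format.

```python
def bits_needed(sample):
    """Smallest two's-complement word length that can hold `sample`."""
    # For a negative value, ~sample has the same number of magnitude bits.
    magnitude = sample if sample >= 0 else ~sample
    return magnitude.bit_length() + 1            # +1 for the sign bit


def pack_block(samples, word_length=16):
    """Return (removed_bits, shortened_words) for one block of signed samples."""
    keep = max(bits_needed(s) for s in samples)
    removed = word_length - keep
    mask = (1 << keep) - 1
    return removed, [s & mask for s in samples]  # keep only the low `keep` bits


def unpack_block(removed, words, word_length=16):
    """Reinstate the removed bits by copying the sign bit back upwards."""
    keep = word_length - removed
    sign = 1 << (keep - 1)
    return [(w ^ sign) - sign for w in words]    # sign-extension trick


quiet = [5, -3, 0, -8, 7]                        # a very low-level passage
removed, words = pack_block(quiet)
print(removed)                                   # 12
print(unpack_block(removed, words) == quiet)     # True
```

Here 12 of the 16 bits in every sample carry nothing but copies of the sign bit, so only four bits per sample plus the single block count need to be stored, and the original values come back exactly.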

Another loss‑less technique is to identify sample values which occur very often in the data stream. These can then be removed and replaced with something to indicate where the true value should be re‑inserted. The zero‑crossing point, for example, is a very frequent event which can be removed for recording but re‑inserted before playback, with significant potential savings.

A more advanced technique, strongly allied to the idea of redundancy, is predictive coding. This is, in principle, a simple idea which has the advantage of suffering very little processing delay (unlike the more sophisticated perceptual coding techniques discussed below), making it a popular technique for real‑time applications. Among the real‑world systems that employ predictive coding are G722, APT X100, and some versions of the DTS system used in the cinema, laser discs and DVDs.

Audio signals are largely repetitive, which is why predictive coding works. The technique involves a 'predictor' which has a knowledge of typical audio signal behaviour. By looking at the preceding audio signal, it tries to anticipate what will happen next and, because of audio's repetitive nature, the prediction is generally quite accurate. If this prediction is subtracted from the original signal, only a small difference signal remains, and this is recorded or transmitted as the data‑reduced result (see Figure 3).

The decoder uses the same predictor 'knowledge' to regenerate the predicted signal, to which the received difference signal is added to rebuild the audio. The accuracy of this system is entirely dependent on the predictor algorithm; typically around 98% of the original signal is retrieved.
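The encoder and decoder relationship can be sketched with a deliberately crude predictor that just extends a straight line through the previous two samples. This is an assumption made for illustration, not the predictor used by any of the systems named above. Note also that the difference signal is left unquantised here, so the round trip is exact; practical coders quantise it coarsely, which is where the loss arises.

```python
import math


def predict(history):
    """Guess the next sample by extending the line through the last two."""
    return 2 * history[-1] - history[-2]


def encode_residual(samples):
    """Subtract the prediction from each sample and keep only the difference."""
    history = [0, 0]
    residual = []
    for s in samples:
        residual.append(s - predict(history))
        history.append(s)
    return residual


def decode_residual(residual):
    """Run the same predictor and add the received difference back on."""
    history = [0, 0]
    for r in residual:
        history.append(predict(history) + r)
    return history[2:]


# A slowly varying test tone: 200 Hz sampled at 48 kHz, scaled to a 16-bit range.
tone = [round(20000 * math.sin(2 * math.pi * 200 * n / 48000)) for n in range(480)]
residual = encode_residual(tone)

print(max(abs(s) for s in tone))             # peak of the original signal
print(max(abs(r) for r in residual[2:]))     # peak of the difference signal
print(decode_residual(residual) == tone)     # True
```

Once the predictor has two real samples to work from, the difference signal stays within a few tens of quantising levels, against a peak of 20000 for the tone itself, so it needs far fewer bits to describe.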

The technique works less well in anticipating essentially random signals in noise‑like sounds, or in predicting highly unpredictable (but crucial) transients. To improve the precision of the system, therefore, many coders use band‑splitting techniques (splitting the whole audio spectrum into four separate frequency bands). This allows multiple predictors to work on simpler band‑limited signals with far greater accuracy than they would if handling the complete signal.

One drawback of predictive coding is that since the decoder must use exactly the same predictor as the encoder, improvements to the 'intelligence' of the encoder can only be useful if your decoder is updated too — otherwise the accuracy of the decoded signal will actually suffer.

In general, this kind of system works very well, providing a typical reduction ratio of around 4:1. However, it can prove fatiguing to the listener over long periods, because damaged transient signals require more 'brain power' from the listener to interpret the sound. Multiple passes through the encoding/decoding process also lead to rapid loss of signal quality, very similar to that experienced when copying analogue cassettes.

Irrelevancy & Perceptual Coding

A loud signal will mask quieter ones which occur a short period before it has started, and have a longer‑lasting effect over quiet signals that occur after it has ceased.

The final and most controversial strategy is to remove data which is irrelevant — ie. data representing sounds considered to be inaudible in the presence of the other elements of a complex audio signal. This relies on frequency and temporal masking, and is entirely dependent on the accuracy of a 'perceptual model' of the human hearing system (see the 'Perceptual Modelling' box).

Perceptual coding involves very precise audio filtering and analysis; processing that is only practical using digital techniques. A bank of filters divides the audio signal into many narrow bands (typically 32 or more) prior to processing, and a perceptual model then analyses the spectral content of the audio to determine which elements are likely to be completely masked (ie. irrelevant) and can therefore be discarded. The remaining audible signals are re‑quantised with a low resolution just sufficient to put the quantising noise below the masking threshold in each frequency band.
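The requantising step can be reduced to a very rough rule of thumb: each bit buys about 6dB of signal‑to‑noise ratio, so a band needs just enough bits to push the quantising noise down to its masking threshold, and a band lying wholly below the threshold needs none. The sketch below applies that rule to made‑up levels; the band names, the figures and the `allocate_bits` helper are all hypothetical, and real coders use far more elaborate allocation schemes.

```python
import math

DB_PER_BIT = 6.02   # each extra bit lowers quantising noise by roughly 6 dB


def allocate_bits(signal_db, threshold_db):
    """Bits per sample for one band so that noise lands at or below the threshold."""
    if signal_db <= threshold_db:
        return 0                                  # masked: nothing to send
    return math.ceil((signal_db - threshold_db) / DB_PER_BIT)


# Hypothetical (name, signal level, masking threshold) in dB; not measured data.
bands = [
    ("band 0", 70.0, 30.0),
    ("band 1", 45.0, 50.0),   # lies below the masking threshold
    ("band 2", 60.0, 48.0),
    ("band 3", 20.0, 15.0),
]

allocation = {name: allocate_bits(sig, thr) for name, sig, thr in bands}
print(allocation)   # {'band 0': 7, 'band 1': 0, 'band 2': 2, 'band 3': 1}
```

In this toy case the four bands need ten bits between them, where 16‑bit linear coding of every band would have used 64.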

Temporal masking is calculated by dividing the signal up into blocks of samples (typically around 10 milliseconds in length) and analysing each block for transients which will act as temporal maskers. Most systems vary the length of the block to take advantage of both backwards and forwards masking (explained in the 'Perceptual Modelling' box elsewhere in this article).

Perceptual coding's complex digital filtering and spectral analysis, combined with the process of treating the audio in blocks, can create significant time delays in the audio signal. A complete encode/decode path can exhibit anything between 20 and 200 milliseconds of delay, which often causes serious problems in real‑time applications.

The decoder is much simpler than the encoder, as it does not require any perceptual modelling knowledge at all. It simply has to decode incoming data back into the original filter bands and re‑quantise the data to conform with a standard PCM format (based on codes embedded in the data), before re‑assembling the data into a composite output signal. Upgrades to the perceptual model in the encoder will automatically result in better quality from every decoder, purely through refinements to the process of deciding which bits of the signal are irrelevant.

MPEG1 and 2, PASC (used on Philips' Digital Compact Cassette), ATRAC (used on Sony's MiniDisc), and Dolby's AC3 are all systems which use perceptual coding as their primary means of determining irrelevancy in complex audio signals. However, they can all work at a variety of sample rates, they all employ requantisation to reduce the number of bits per sample, and they all remove some degree of redundancy in the signal too (see the 'Perceptual Coding Processes' box for more on these).

Good Or Evil?

Figure 4: Simplified Perceptual Coding structure (based on MPEG2).

A great deal has been written and said about data reduction systems, much of which has suggested that they are an unnecessary evil. This is not the case: provided the data reduction system is appropriate to the application and is used sensibly, it is a useful tool that allows better results than would otherwise be possible — for example, longer recording times, smaller recording media, more tracks, or whatever.

This is not to say data reduction is suited to every situation, but digital recording with most modern data reduction systems will provide better quality than most semi‑pro analogue recorders, especially cassette multitrackers.

Multitracking is a specific case where digital data reduction offers profound advantages. Since each track is carrying relatively simple signals which include a great deal of irrelevancy and redundancy, data reduction systems can work very effectively in allowing more tracks to be squeezed onto a particular medium with negligible side effects. Data reduction systems usually only reveal their weaknesses with very complex signals, so you might prefer to record your final mix on a linear PCM format, but data reduction can offer more benefits than drawbacks for the multitrack recorder.

One of the biggest problems with current data reduction systems is in coding stereo (or surround) material. This is primarily because many perceptual models are not sophisticated enough to cope with 'stereo unmasking'. This is a phenomenon whereby, although a quiet signal in one channel might be masked by louder elements in the same channel, in the context of stereo monitoring its spatial position (determined by the relative levels between the two channels) effectively unmasks it, revealing it as a separate and identifiable signal.

Imagine, for example, a rhythm guitar panned three‑quarters towards the left, behind the rest of the instrumentation in a complex mix. The perceptual coder might decide that the (quiet) guitar in the right channel is masked by everything else going on, although it is sufficiently loud in the left to remain audible. Consequently, the guitar might be removed from the right channel as part of the data reduction with the result that, when listening in stereo, it will appear to be positioned hard left instead of three‑quarters left. In practice, it is more likely that some frequency components of the guitar (for example harmonics) will be removed while others are retained, and so the image of the guitar will become blurred. Although this is perhaps an exaggerated example, I hope the point is clear: data reduction systems can impose instability in stereo images if they are not very carefully designed.

One solution is to treat the signal as M‑S (middle and side) components rather than L‑R (left‑right) components. This takes advantage of the fact that most loud signals sit in the centre of the stereo image (middle in M‑S) and are therefore common to both channels, leading to a lot of redundancy. By dealing with stereo in this way, a greater proportion of the data rate can be allocated to the side signals that convey imaging information. This is often called Joint Stereo Mode.
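The M‑S conversion itself is nothing more than a sum and a difference. The sketch below uses a few made‑up sample values (a centre‑panned vocal plus a guitar panned towards the left) to show that everything common to both channels ends up in the middle signal; the function names are hypothetical.

```python
def to_mid_side(left, right):
    """Sum and difference of the two channels, halved to keep the same scale."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side


def to_left_right(mid, side):
    """Exact inverse of to_mid_side()."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


# Made-up sample values: vocal in the centre, guitar three-quarters left.
vocal = [0.5, -0.25, 0.75]
guitar = [0.125, 0.25, -0.125]
left = [v + 0.75 * g for v, g in zip(vocal, guitar)]
right = [v + 0.25 * g for v, g in zip(vocal, guitar)]

mid, side = to_mid_side(left, right)
print(side)                                        # only the guitar remains
print(to_left_right(mid, side) == (left, right))   # True
```

The centred vocal cancels completely in the side signal, leaving only a low‑level copy of the guitar, which is the part that carries the imaging information.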

The Bottom Line

Data reduction systems necessarily reduce the amount of data, so it stands to reason that multi‑generation processing will lead to quality loss. The MPEG algorithms seem to be slightly less prone to this, but it remains a general problem with all systems. Things are worst when different data reduction systems are cascaded, in which case the quality deteriorates very quickly indeed. The symptoms usually become apparent after two or three generations in the form of vague stereo imaging, noise modulation, a harshness to the sound, aliasing, and increased background (quantising) noise.

In general, it is wise to avoid recording a complex mix on a data‑reduced format if any subsequent processing or copying is envisaged. It would also be sensible to avoid recording stereo source material on data‑reduced systems wherever possible, but single mono sources (eg. individual tracks on a multitrack) will not be noticeably affected by data reduction.

Help!

For more detailed explanations of some of the more complex terms and concepts touched on in this article (such as PCM, quantising, sample rate and bit resolution, to name a few), check out Hugh Robjohns' ongoing series on the basics of Digital Audio, which began in SOS May '98 and continues on page 222 of this issue.

Perceptual Modelling

When our auditory systems analyse sound, our brains do not treat the audio spectrum as a continuum. Rather, we perceive sound through around 25 distinct critical bands of varying bandwidths (at 1kHz the critical band is about 160Hz wide, but at 10kHz it is 2500Hz wide). A loud sound within one critical band will tend to mask quieter sounds within the same band, a phenomenon called frequency masking. Although our hearing is incredibly perceptive of simple signals in isolation, in the presence of complex sounds it effectively runs out of 'hearing resources' and so can only perceive the most dominant parts at any particular moment in time (see diagram above).

For example, the hum from a bass guitar amplifier is inaudible while the guitar is playing, although quite evident on its own. Similarly, tape hiss is inaudible in the presence of full‑range music, but obvious between tracks.

The second element of perceptual modelling is temporal or time‑masking, in which a loud sound affects our perception of quieter signals both before and after it (see the diagram at the bottom of this box). A quiet signal that occurs 10‑20 milliseconds before a louder one, for example, may be masked by the louder signal — this is called backwards masking. The squeak of a kick‑drum pedal might be plainly audible on its own, but can be masked by the presence of a much louder bass drum thump which happens a few milliseconds later. The hearing mechanism also takes time to recover from a loud sound, and this creates a masking effect which extends up to 100‑200 milliseconds after the masking signal has ceased — this is called forward masking. The length of the masking is related to the amplitude of the masking signal.

Perceptual Coding Processes

- MPEG1 & MPEG2

MPEG is an abbreviation of 'Moving Picture Experts Group', an international body set up in 1988 to define digital audio and video data reduction systems. MPEG1 is an elaborate perceptual coding specification with three subdivisions of increasing complexity. Layer I is the simplest, offering typically a 4:1 reduction in data rate with a 32‑band filter bank. Layer II is essentially the same thing, but employs more complex spectral analysis of the input signal. This allows more accurate perceptual modelling and thus greater data reduction. Layer III goes further and incorporates even more sophisticated techniques such as varying filter bank bandwidths to better simulate the critical bands in human hearing, and non‑linear quantising to increase the efficiency of the data reduction.

MPEG2 is an extension of MPEG1 providing multi‑channel surround sound capabilities such as 5.1 channels, although other arrangements are also supported. The original MPEG2 was designed to be fully backwards compatible with MPEG1 systems, although a non‑backwards compatible version (MPEG‑2 NBC) has recently gained approval for DVDs and broadcast applications.

- PASC

Precision Adaptive Sub‑band Coding is derived from the MPEG1 Layer I audio data reduction system. It is used in the Philips Digital Compact Cassette (DCC) and operates at a compression ratio of 4:1.

- ATRAC

Adaptive TRansform Acoustic Coding is the system employed by Sony on their Minidisc as well as in the SDDS cinema surround sound format. It offers a 5:1 data reduction ratio in the case of Minidisc and employs the equivalent of 52 filter bands for spectral analysis and re‑quantisation. The size of the sample blocks is varied dynamically between 11.6 and 1.45 milliseconds according to the nature of the audio signal to accommodate temporal masking. ATRAC has now gone through many revisions since its launch, and the latest versions are extremely good.

- AC3

This is Dolby's third data reduction process (hence the name) and is already widely employed in laser discs, DVDs, digital television broadcasts, and SR‑D/DSD cinema presentations. It differs from the other systems mentioned here in that it was designed from the outset to accommodate multi‑channel audio formats with data rates ranging from 32 to 640 kilobits per second per channel depending on the application.