The next evolution of Bluetooth contains a number of innovations that should appeal to consumers and professionals alike. And it’s coming to a device near you.

When it comes to successful digital technologies, few can top Bluetooth, the wireless data and audio streaming standard that was named for some reason after an ancient Danish king with really bad dental hygiene. In the past five years, over 23 billion Bluetooth devices have been sold: a mind‑boggling amount of gear, equating to roughly three devices for every human on the planet. Bluetooth audio streaming devices, such as headphones, earbuds, headsets, portable speakers and TVs, comprise some 28 percent of that total (1.3 billion units last year), much of the rest being smartphones and non‑audio data transmitters like computer keyboards, mice, and FitBits.

In the wake of this spectacular success, the Bluetooth Special Interest Group (SIG) has begun publishing the draft specifications for Bluetooth LE Audio. Enough information is already available for manufacturers to get started on designs, and the full spec should be approved by early 2022. This is a brand‑new standard intended eventually to supersede the Bluetooth we know so well, and which is now dubbed Bluetooth Classic.

As Nick Hunn, CTO of WiFore Technology and one of the major developers of Bluetooth LE Audio, puts it, the goal of the new standard is to provide a “set of tools that will cope with any wireless audio application — any combination of music and voice — that anyone is likely to come up with for the next 10 to 20 years”. For those of us in professional music and audio production, this is exciting news. Not only will Bluetooth LE Audio allow audiences to hear and share our work in much higher quality, but the new standard is so flexible that it may very well spark new ways to conceive of sound‑centric art and entertainment.

Into The Jaws Of Bluetooth

Simply put, Bluetooth is a short‑range (10 metres) wireless communication standard that operates over the 2.4GHz frequency band. There are two basic kinds of Bluetooth. What’s now called Bluetooth Classic was developed for telephone and audio transmission, which were conceived as separate use cases. Bluetooth Low Energy (LE) was originally intended for transmitting small amounts of data, such as keyboard and mouse information to a computer or sensor data from health and fitness devices to your smartphone. “Bluetooth LE was never designed to transmit audio,” explains Nick.

‘Profiles’ are Bluetooth‑speak for code that defines possible applications and use cases: they specify the general ways that Bluetooth devices communicate with each other. The first audio profile that Bluetooth developed was Headset, intended for wireless, single‑sided headsets in call centres or cordless phones at home. It was soon replaced by Handsfree to accommodate car connectivity.

Then in 2006, Bluetooth released another audio profile, A2DP, which allowed for higher‑quality audio transmission. It took 10 years, but once audio and video streaming caught on, people wanted to listen to music and watch movies everywhere. Sales of wireless earphones exploded and Bluetooth established itself as the all‑but‑universal wireless audio streaming standard for mobile devices. “I did a graph looking at [the growth of] Spotify subscribers against sales of Bluetooth headsets,” Nick says, “and they track almost identically.”

Today, Bluetooth Classic has become, well, a little long in the tooth. The Handsfree and A2DP profiles “don’t really work together,” Nick says, because “the Handsfree and A2DP profiles were designed without anybody ever really thinking that you’d want to swap between them.” For example, if you’re listening on your AirPods to some desert blues and a phone call comes in, Bluetooth Classic has to jump through considerable hoops just to stop playing the song and take the call. “It’s not a clean solution,” he says.

Also, Nick continues, “We have nothing [in Classic Bluetooth] that supports a separate left and right earbud. All the stereo [earphone and earbud] solutions you see on the market are all proprietary ways of trying to cope with A2DP.” The Bluetooth Classic audio spec has other limitations that became apparent as Bluetooth usage spread far and wide: it uses a fairly significant amount of energy, doesn’t fully support simultaneous bidirectional streaming, is rather slow and, except for proprietary solutions, permits only one device to be connected to another device at a time.

The Big Hack

Around the same time that sales of headphones using Bluetooth Classic went through the roof, Bluetooth Low Energy acquired audio streaming capability. And therein lies a tale.

Many hearing aid companies provide expensive, proprietary remote controls to wirelessly adjust the volume and program presets of hearing aids. They also sell expensive, proprietary devices that stream landline phone calls directly to hearing aids. But once everyone started using smartphones, no one wanted to lug around these extra gadgets. They wanted to use their phones to change volume and take phone calls, just like people with wireless earbuds.

There was one major problem: hearing aid batteries are extremely small, and Bluetooth Classic’s energy requirements would quickly drain them. So, Apple (and eventually others) essentially hacked Bluetooth LE for, as Nick explains, “a very simple bi‑directional audio stream” that provided wireless audio connectivity for hearing aids. The sound quality was limited in terms of bandwidth but it worked and met an important need. ‘Made for smartphone’ hearing aids were an instant hit. And that’s when something remarkable happened...

Universal Design

It’s rare that the needs of people with disabilities are central to the development of a technology intended for mass use. Yet meeting their needs often leads to products that benefit everyone, a concept called Universal Design. That is exactly what occurred when, according to Nick, “The hearing aid industry sat down with the Bluetooth SIG and said, ‘Look, things are changing. We want to connect hearing aids [via Bluetooth LE], but we think there is a bigger market for this.’ Sound today is being used in a whole variety of different ways that need something a lot more flexible than the monolithic approach [of Bluetooth Classic].”

Originally, hearing aid companies wanted “to gain better performance and battery life”, recalls Chuck Sabin, Senior Director of Market Development for the Bluetooth SIG. But as discussions ensued, it quickly became clear to many audio companies in the Bluetooth SIG that a new protocol which not only ran on very low energy but also met the needs of hearing aid users would be of great interest to everyone. And so, work began on the new standard: Bluetooth LE Audio. “This was our opportunity to think about this holistically, to build a new architecture for the next generation of audio with new device types, high‑quality audio at low bit rates using less power, and new location‑specific audio services.”

Low-latency Codecs & Shiny New Features

Bluetooth LE Audio extends the wireless audio capability of Bluetooth LE in several important ways. For a deep dive into the fascinating details, take a look at the ‘Cracking The Codec’ box. But briefly...

1. A new set of low‑latency codecs was developed by Fraunhofer specifically for Bluetooth LE Audio, called LC3 (mandatory for all LE Audio applications) and LC3plus. These are not your Dad’s codecs. Compared with SBC and aptX — two codecs used in Bluetooth Classic that date back as far as the ’70s and ’80s — LC3 requires much lower energy but can deliver audio up to 24‑bit/48kHz; the high‑resolution LC3plus can even go to 32/96. Extensive listening tests conducted by Fraunhofer and Bluetooth confirm the transparency of high bit‑rate LC3 coding. According to Nick Hunn, LC3 “really is a major leap forward in terms of a state‑of‑the‑art codec”.

2. Bluetooth LE Audio provides ‘multi‑stream’ signal‑synchronisation capability with latency potentially down to 25ms, both of which are considerable advances over Bluetooth Classic. LE Audio supports not just a simultaneous left and right earbud — which solves the single‑stream problems inherent to A2DP — but permits an arbitrary number of synchronised audio channels (dependent upon bandwidth). In other words, with Bluetooth LE Audio, not only are multiple language translations possible in a single wireless stream, but even multichannel audio, 5.1 surround, and Dolby Atmos speaker systems can be catered for.

3. Bluetooth LE Audio provides a suite of bi‑directional audio sharing technologies. One simple example: instead of handing your friend an earbud when you want to share some music with her, you’ll simply tap a button on your phone and the music will now stream to her own earbuds as well as yours. But that’s just for starters. Unlike Bluetooth Classic, which allows only one device to connect at a time to another device, Bluetooth LE Audio’s ‘one‑to‑everyone’ broadcast capability can be used, say, for private listening to separate TVs at sports bars or elsewhere.

In fact, the broadcast potential of LE Audio is far greater than simply short‑range audio transmission. The effective broadcast capacity of LE Audio extends much further than Bluetooth Classic (as much as 30 to 50 metres, even further if multiple transmitters are deployed). This capability, combined with exceptional sound quality, will make it possible to use LE Audio not only for next‑gen assistive listening at concerts and cinemas, but also personalised audio for anyone with LE Audio‑enabled earbuds. Think silent disco or your own high‑res music mix at a pop concert: all doable with the new standard.

Advanced Uses Of LE Audio

The SIG is imagining Bluetooth LE Audio will be used for personal music sharing, announcements in airports, assistive listening, and similar consumer uses. But LE Audio is such a flexible and powerful standard that, like MIDI, it has the potential to create brand‑new use cases and maybe even product categories far beyond the designers’ original intent.

Can Bluetooth LE Audio and LC3 be used for professional audio production? It’s quite possible. Wireless headphones with sound quality rivalling wired models are quite conceivable, as are wireless monitor systems for personal studios. LE Audio may also find a place in location sound for film, especially for scenarios where very small mics and transmitters are needed. Because LE Audio is multichannel, it may even be possible to use it with multiple wireless microphones. As for the new codecs, Alex Tschekalinskij, the engineer who worked on the LC3 and LC3plus codecs, reveals: “We had one exciting request from a service that allows you to create and play music together via an app or website. It’s basically online music jamming. The service can be used by musicians who want to rehearse or produce music online. LC3plus offers low‑delay modes and a dedicated high‑resolution mode, so it would be a perfect fit!”

The ready availability to the entire public of high‑quality, multichannel, wireless audio also suggests possibilities for new kinds of interactive drama and film. Nick Hunn notes: “You’ve got the potential to have 10 or more tracks,” that can be streamed simultaneously. It would be entirely possible to create a new type of immersive cinema‑in‑the‑round in which, say, part of the audience faces front, listening on their earbuds to some sci‑fi baddie berate his army before the great battle for galactic control while, at the same time, a different part of the audience faces to the rear, tuned into the good guys’ plans to defeat them.

Whether any of these or currently unimaginable new equipment and media get developed is anyone’s guess. But what’s certain is that in the years to come, Bluetooth LE Audio will open up numerous new ways to use wireless sound.

Cracking The Codec

To learn how the LC3 and LC3plus codecs work, I got a tour from Alex Tschekalinskij, a software engineer in the Low Delay Audio Communications Group at Fraunhofer, who helped develop them.

What exactly is a codec? A lot of data gets slung through the air during wireless audio transmission, and that takes a lot of energy. To conserve battery power, developers have worked out clever algorithms that compress the audio data to a manageable length for transmission (ie. encode it) and decompress (decode) it when it is received. This ‘encode/decode’ process is called a codec.

A good audio codec has to accomplish two neat tricks: minimise complicated computing (which takes energy and time), and do so in such a fashion as to maintain good sound quality. The Low Complexity Communication Codec (LC3) does just that, supporting sample rates up to 48kHz. LC3plus extends the capabilities of LC3 with somewhat more elaborate handling of the data stream that allows for sampling rates up to 96kHz, lower total harmonic distortion, additional low‑delay modes and features that come in handy in difficult transmission environments.

LC3/LC3plus are frame‑based codecs. They can look at 7.5 or 10 milliseconds worth of samples, analyse those samples, and calculate a way to compress the information in those samples so they can be broadcast more efficiently. LC3plus offers an additional 2.5ms frame duration.

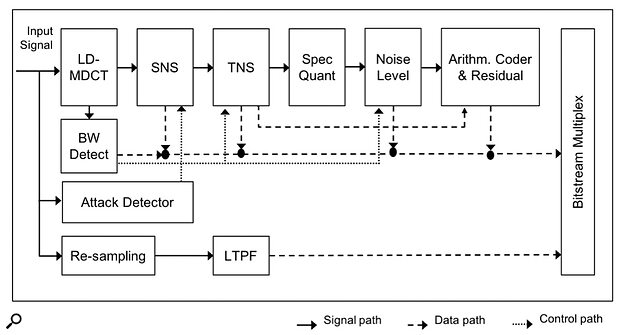

Bluetooth LE Audio codec block diagram.

Encoding begins in the Low Delay Modified Discrete Cosine Transform module (LD‑MDCT) which is “basically a very widespread time‑to‑frequency transformation used in perceptual audio coding. This is where the codec’s time delay occurs,” notes Alex. “The total algorithmic delay of the codec is the sum of the frame duration, say 10 milliseconds, and the lookahead from the encoder side contributed by the MDCT (2.5 milliseconds for most cases). That adds up to a total delay of 12.5ms for the 10ms frame duration. With LC3plus, the total algorithmic delay can be as low as 5ms for the 2.5ms frame duration.”
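The frame sizes and delay figures quoted above come down to simple arithmetic, sketched here in Python. The function names are purely illustrative, and the 2.5ms lookahead default is the “most cases” figure Alex quotes, not a value guaranteed for every codec configuration.

```python
def samples_per_frame(sample_rate_hz, frame_ms):
    """Number of audio samples the codec analyses in one frame."""
    return round(sample_rate_hz * frame_ms / 1000)


def algorithmic_delay_ms(frame_ms, lookahead_ms=2.5):
    """Total algorithmic delay: frame duration plus encoder lookahead."""
    return frame_ms + lookahead_ms


# The two cases discussed in the text, at a 48kHz sample rate.
for frame_ms in (10, 2.5):
    print(frame_ms, samples_per_frame(48000, frame_ms), algorithmic_delay_ms(frame_ms))
```

At 48kHz, a 10ms frame holds 480 samples and gives 12.5ms of total delay, while the 2.5ms LC3plus frame holds 120 samples and gives 5ms.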

The LD‑MDCT feeds a bandwidth detector (BW Detect) which does exactly what it says on the tin. “If you configure the codec to work at 32kHz but someone is using a legacy 8kHz [wireless phone] handset, this could potentially lead to artefacts,” said Alex. “The bandwidth detector can detect such band‑limited signals, and basically control other tools to avoid problems.”

The frequency components generated by the LD‑MDCT are passed to the Spectral Noise Shaper (SNS), where they are quantised and processed. Alex described the SNS as an algorithmically complex tool that is implemented efficiently with DSP (digital signal processing) and which “maximises the perceptual audio quality by shaping the quantisation noise so that it’s minimally perceived by the human ear.”

Next, the Temporal Noise Shaping module (TNS) “reduces pre‑echo artefacts for signals with a sharp attack,” Alex explains. Codec designers test the quality of the encoding with recordings of castanets. These have sufficiently sharp transients to make pre‑echo artefacts apparent, which the TNS (in conjunction with another module, the Attack Detector) can eliminate.

After spectral and temporal noise shaping, the Spectral Quantiser “calculates a global gain for a single frame and then quantises the spectrum with this global gain. The Spectral Quantiser works in an iterative way and estimates the number of bits required to encode the quantised spectrum later in the arithmetic encoding.”
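The iterative idea Alex describes can be illustrated with a toy Python sketch. This is not the LC3 algorithm: the bit estimate below is a crude stand‑in for the real arithmetic coder, and the function names, starting gain and step size are all assumptions made for illustration.

```python
def quantise_spectrum(spectrum, gain):
    """Divide every coefficient by one global gain and round to an integer."""
    return [round(c / gain) for c in spectrum]


def estimate_bits(quantised):
    """Crude proxy for coded size: 1 bit per zero, magnitude bits plus a sign bit otherwise."""
    return sum(1 if q == 0 else abs(q).bit_length() + 1 for q in quantised)


def find_global_gain(spectrum, bit_budget, start_gain=1.0, step=1.25, max_iter=200):
    """Raise the gain step by step until the quantised frame fits the bit budget."""
    gain = start_gain
    for _ in range(max_iter):
        quantised = quantise_spectrum(spectrum, gain)
        if estimate_bits(quantised) <= bit_budget:
            return gain, quantised
        gain *= step  # coarser quantisation needs fewer bits
    raise ValueError("bit budget too small for this frame")


# A made-up eight-coefficient frame, purely for demonstration.
frame = [100.0, -40.0, 12.0, 3.0, -1.0, 0.5, 0.0, 0.0]
gain, quantised = find_global_gain(frame, bit_budget=20)
print(gain, quantised, estimate_bits(quantised))
```

A tighter bit budget forces a larger gain, which rounds more of the small coefficients to zero. Those zeros are the ‘spectral holes’ that appear at lower bit rates.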

At lower bit rates, spectral quantisation inevitably creates ‘spectral holes’ which sound like robotic bird chirps. These ‘birdies’ are fixed with the Noise Level/Filling module that uses a pseudo‑random noise generator to fill the holes.
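As a rough illustration of the hole‑filling idea, the Python sketch below replaces zeroed coefficients with low‑level pseudo‑random noise. It is a simplification under stated assumptions: the function name, the fixed noise level and the use of Python's `random` module are illustrative choices, not details of the LC3 specification.

```python
import random


def fill_spectral_holes(quantised, noise_level, seed=0):
    """Replace zero coefficients with +/- noise_level, leaving the rest untouched."""
    rng = random.Random(seed)  # seeded, so the same noise can be regenerated
    return [q if q != 0 else rng.choice((-noise_level, noise_level)) for q in quantised]


# A made-up quantised frame with three holes.
print(fill_spectral_holes([5, 0, 0, -3, 0], noise_level=0.25))
```

Because the generator is seeded, the same sequence can be reproduced without transmitting the noise itself. Only the level needs to be known.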

To further reduce coding noise at lower bit rates in frames with pitched or tonal information, a Long Term Post Filter is applied. At higher bit rates this tool is turned off during decoding.

Generic Audio Framework

Once audio has been encoded by LC3, it can be streamed to receiving devices which essentially reverse this process and turn it back into sound. Implementing and controlling the entire process of wireless streaming is the business of Bluetooth LE Audio. Unlike Bluetooth Classic, which has monolithic profiles that do one thing only, LE Audio provides, as Nick Hunn puts it, two levels of profiles that form “a toolbox of things to set up audio streams and then tear them down or change them.”

Bluetooth LE Audio is built upon the Bluetooth Core. To drastically simplify a spec that runs over 3000 pages, the Core consists of the Bluetooth radio and the basic framework that enables a Bluetooth device (Classic, LE, or both) to wirelessly send and receive. The Core is where the physical layer and the link layer reside. Introduced in the Core for Bluetooth 5.2 (we’re now at 5.3) were ‘isochronous channels’. Isochronous (ie. ‘occurring at the same time’) channels serve as the basic technology for the new synchronisation and broadcast features of Bluetooth LE Audio.

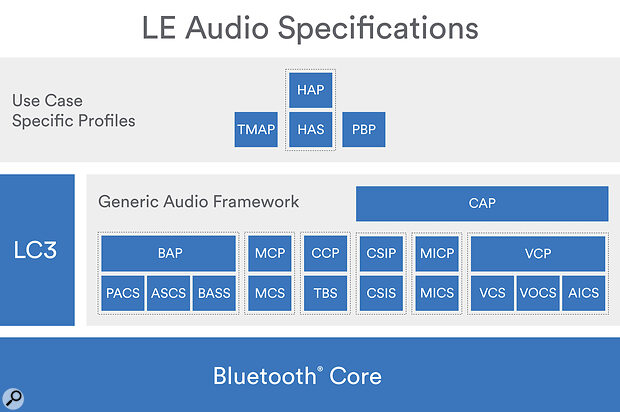

Diagram showing Bluetooth LE Audio's Generic Audio Framework.

Above the Core, Bluetooth LE Audio provides two levels of profiles. The Generic Audio Framework provides, according to Nick, “anything that we think is a common function across more than one use case”. Designers implement what they need for a specific use case and don’t activate what they don’t need. The use case profiles define a higher level of function, specifying the mandated and optional features available in the Generic Audio Framework required to meet a specific use case.

Jeff Solum is the Wireless System Architect at Starkey Labs and a member of the Audio Architecture and Hearing Aid Working Groups at the Bluetooth SIG. An avid amateur pilot and barbershop quartet crooner, he patiently walked me through the Generic Audio Framework and the use case profiles.

The Generic Audio Framework for Bluetooth LE Audio — the audio functions available for any use case — contains both services and profiles. “Services have both characteristics and control points,” Jeff said. “The profiles interact with the characteristics and control points in services to do useful things. In addition, profiles can interact with multiple services.”

The Basic Audio Profile (BAP) just to the right of LC3 in the diagram interacts with three services. The Published Audio Capabilities Service (PACS) lists which codecs and sampling rates are available. The Audio Stream Control Service (ASCS) “talks to the Bluetooth Core to set up the isochronous channels,” Jeff explains.

The Broadcast Audio Scan Service (BASS, pronounced like the fish, not the guitar) is used to efficiently look (scan) for broadcast streams to connect to. “Scanning is kind of a heavy burden on power consumption,” says Jeff, “so it may not be efficient for a pair of earbuds to scan. A phone would have more capability [than earbuds] for doing such things. Let’s say you walked into a health club or a sports bar. There may be 10 TVs in there and it might be hard for an earbud without a user interface to sort out which one you want to listen to. So BASS will show you [a list on your smartphone]. It’ll say, well, I’ve got CNN here or ESPN sports or whatever it is. And it might even tell you what language it’s in or who’s playing.” BASS also allows the use of encryption. “If you go to a cinema, you might use your phone to scan in a QR code that would give you the encryption key to listen to an encrypted broadcast specifically for Cinema 3 [and not Cinemas 1 or 2].”

The Media Control Profile and Service (MCP and MCS, respectively) provide controls for the playback of streaming media. The MCS defines numerous possible characteristics for a media player, from basic functions like play, stop, rewind, and playback speed to the display of media type and artwork. The MCS defines which features will be implemented on a specific device and how a user will interact with them.

The Call Control Profile (CCP) interacts with the Telephone Bearer Service (TBS). “The TBS is really rich and much more capable than the old Bluetooth handsfree profile,” said Jeff. “You can determine whether you want to listen to Skype or your GSM phone network and you can even have multiple bearers (telephone providers) join. You can have a Skype call join with your GSM phone call if you want.” The CCP can provide very simple functions — answer a call, say — or, in a car, it can be set up to provide a rich voice‑assistant‑based interface to answer calls, put them on hold, or forward them.

The Coordinated Set ID Profile (CSIP) and Coordinated Set ID Service (CSIS) define devices to be parts of a set and treat them as a single device. This simplifies the synchronisation of a stereo pair and creates a better stereo image than classic Bluetooth.

The Microphone Control Profile and Microphone Control Service (MICP and MICS) provide basic controls (mute and gain change) for microphones on Bluetooth LE Audio devices such as hearing aids or hearables.

The Volume Control Profile (VCP) interacts with three services. The Volume Control Service (VCS) controls volume on, say, the earbuds rather than on the source device (like a smartphone or computer). “The reason for that,” Jeff explained, “is that we can send a full dynamic range through the encoder on the source side. If we turn down the analog signal on the encoder, we’re giving up some of the fidelity because the encoder really works best if that signal is, say, ‑12dBFS. The Volume Control Profile would be on your smartphone and would always send out the same line level and then you control the volume at the [earbuds or hearing aids], giving up no fidelity whatsoever.” The Volume Offset Control Service (VOCS) allows you to control left‑right balance and the relative mix between audio signals such as ambient mics and streaming audio on a hearable. The Audio Input Control Service (AICS) selects between different audio streams — live mics or streamed audio from a TV — and allows you to mute them.
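Jeff's point about where the volume gets turned down can be demonstrated with a small Python experiment. Note the assumptions: a plain 16‑bit quantiser stands in for the encoder here, which is far simpler than LC3, and the test tone, the ‑40dB volume setting and all function names are arbitrary illustrative choices.

```python
import math


def quantise(x, bits=16):
    """Round a sample in the range -1..1 to the nearest step of a fixed-point grid."""
    scale = 2 ** (bits - 1)
    return round(x * scale) / scale


def snr_db(reference, degraded):
    """Signal-to-noise ratio of `degraded` measured against `reference`."""
    signal = sum(r * r for r in reference)
    noise = sum((r - d) ** 2 for r, d in zip(reference, degraded))
    return 10 * math.log10(signal / noise)


sample_rate = 48000
# A 997Hz tone peaking at 0.25 of full scale, roughly -12dBFS.
tone = [0.25 * math.sin(2 * math.pi * 997 * n / sample_rate) for n in range(4800)]
volume = 0.01  # the listener asks for -40dB

ideal = [s * volume for s in tone]
source_side = [quantise(s * volume) for s in tone]  # turned down before the quantiser
sink_side = [quantise(s) * volume for s in tone]    # turned down at the earbud

snr_source = snr_db(ideal, source_side)
snr_sink = snr_db(ideal, sink_side)
print(f"source-side volume: {snr_source:.1f} dB, sink-side volume: {snr_sink:.1f} dB")
```

In this simplified model, attenuating at the sink keeps the signal well above the quantisation noise, whereas attenuating at the source pushes it down towards that noise before it is ever transmitted.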

Finally, serving as an overall controller for all these profiles is the Common Audio Profile (CAP).

Moving on to the use case profiles, Jeff describes them as “kind of like a pick‑list.” These comprise a list of capabilities chosen from the Generic Audio Framework that can be used for specific use cases. To conform to a use case profile, “you have to pick certain mandatory things from a list. And then there are certain optional things you can add to it.” Currently, there are three use case profiles available.

The Telephony and Media Access Profile (TMAP) provides a list of mandatory and optional capabilities for devices like smartphones, TVs, headsets, earphones, and hearables. Among the mandatory TMAP elements are support for sampling rates up to 48kHz as well as smooth switching between, say, music streaming and an incoming phone call.

The Hearing Access Profile (HAP) and the related Hearing Access Service (HAS) provide a more basic list of functions — for example, designers won’t want to set up a hearing aid to forward calls, something more easily done from a smartphone. But HAP does include the capacity to make adjustments to a hearing aid program (boosting the treble, for example, when in a noisy cafe) and then save the settings to a memory. The minimum mandatory sampling rates that a HAP hearing aid must support are 16 and 24 kHz; higher rates are possible but optional.
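The ‘pick‑list’ notion of mandatory and optional features lends itself to a short Python sketch. Everything named here is hypothetical: the `UseCaseProfile` class and the feature labels are invented for illustration, and only the 16 and 24 kHz mandatory rates come from the description of HAP above; the optional rates listed are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCaseProfile:
    name: str
    mandatory: frozenset
    optional: frozenset = frozenset()


def missing_features(profile, device_features):
    """Mandatory features the device lacks; an empty set means it conforms."""
    return profile.mandatory - set(device_features)


def conforms(profile, device_features):
    return not missing_features(profile, device_features)


# Illustrative only: mandatory rates as quoted in the text, optional ones assumed.
hap_like = UseCaseProfile(
    name="HAP (sketch)",
    mandatory=frozenset({"16kHz", "24kHz"}),
    optional=frozenset({"32kHz", "48kHz"}),
)

print(conforms(hap_like, {"16kHz", "24kHz", "48kHz"}))
print(missing_features(hap_like, {"16kHz", "48kHz"}))
```

A device that offers an optional higher rate but lacks one of the mandatory ones still fails the check, which is the essence of the mandatory/optional split.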

Finally, there is the Public Broadcast Profile (PBP) intended for audio sharing, large venue private and assistive listening, and simultaneous translations of spoken audio. Jeff explains: “You can have multiple channels in one wireless stream. With PBP, you have to support at least one channel that everyone can listen to.”

That word “everyone” is one more bow to Universal Design. If, for example, you create a Bluetooth LE Audio‑enabled TV with high‑end audio, you must also provide at least one wireless channel that is compatible with lower sampling rates that any LE Audio device — including small, low‑power headphones or hearing aids — can access. PBP also contains highly sophisticated filtering capabilities. For example, a public address system in an airport could, using PBP, broadcast a dozen separate channels of audio, each in a different language. A traveller from Korea walking through the concourse could then set her smartphone to detect only Korean‑language airport announcements.
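The airport scenario amounts to filtering a list of discovered broadcasts by their metadata, which can be sketched in a few lines of Python. The `Broadcast` record and its fields are hypothetical; the actual metadata format is defined by the Bluetooth specifications and is not reproduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Broadcast:
    name: str
    language: str


def select_broadcasts(broadcasts, language):
    """Keep only the broadcasts whose advertised language matches the listener's choice."""
    return [b for b in broadcasts if b.language == language]


# Made-up announcements channels for one airport concourse.
discovered = [
    Broadcast("Gate announcements", "en"),
    Broadcast("Gate announcements", "ko"),
    Broadcast("Gate announcements", "fr"),
]

for b in select_broadcasts(discovered, "ko"):
    print(b.name, b.language)
```

The same pattern would apply to the sports bar example: the phone scans once, then presents or selects only the streams that match what the listener has asked for.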