Last month, Paul Wiffen explained how he heard about mLAN — a new data‑transfer protocol which will allow us to send audio, MIDI, and even video down one FireWire connector. This month, he finds out from Yoshi Sawada of Yamaha's mLAN development team how the system is likely to work in practice. This is the second article in a four‑part series.

Things are clearly moving on apace with mLAN; new developments have taken place even since the first instalment of this series appeared last month. Those who read that article will remember how I marked Apple's inclusion of the standard in OS X for both MIDI and digital audio as a very significant step forward, and how I pointed out that the only thing that could really prevent the standard catching on now would be a total boycott of mLAN by the other major manufacturers. Well, I am happy to report that at Summer NAMM in Nashville, Korg announced an EXB‑mLAN optional interface for their new Triton Rack (see picture). Although clearly a prototype (the label marked 'prototype' gave it away really), this interface demonstrated Korg's will to encourage the fledgling standard. This is another major step, and ranks in significance, in my mind, alongside the 1983 NAMM show, when both Sequential Circuits and Roland showed synthesizers with MIDI for the first time.

What's So Great?

Korg's recently announced mLAN option, shown here in the back of their new Triton Rack. Hopefully, Korg will be the first of many manufacturers to endorse mLAN.

Some people I have spoken to since the first instalment of this series said that while they grasped my enthusiasm for mLAN, I didn't make it clear enough just why it is so much better than previous interface standards. Here are a few comparisons which may help you appreciate how its performance differs from that of other, more familiar interfaces.

As we wouldn't really need mLAN if it weren't for multi‑channel audio transmission, let us compare mLAN with existing digital audio interfaces. The most commonly used are AES‑EBU at the professional end of the industry and S/PDIF at the consumer end (although this distinction is becoming increasingly blurred). For the purposes of this comparison we can regard them as delivering the same performance, ie. stereo transmission (their bandwidth limit) from point‑to‑point (ie. the output of one device to the input of another) over each cable connection. Even though ADAT Optical and TDIF transmit more channels (eight), they are still point‑to‑point protocols. In contrast, mLAN can deliver dozens and dozens of audio channels to any device anywhere on a network.

Perhaps a fairer comparison might be with a computer interface like the Universal Serial Bus (USB), which many of you may have encountered by now on Macs or PCs. The transmission speed of USB is 12Mbps (megabits per second). IEEE 1394 or FireWire, onto which mLAN is piggybacked, can be set to run at 100, 200 or 400Mbps, ie. between eight and 33 times faster. The service interval of FireWire (ie. how often a message comes across) is 125µsecs, eight times faster than the 1msec of USB. But it is not just a question of speed. USB (like SCSI or the old Mac serial interface before it) has to be managed by a computer, so you have an enforced hierarchy with a single computer as host and numerous peripherals dependent upon it for control. mLAN is self‑managing (it doesn't require all actions to be initiated from a single controlling device) and is peer‑to‑peer (industry jargon meaning that all connected devices have an equal status on the network). This means that you can initiate transfers from whatever connected device is most convenient, be it mixer, synth, or computer. This makes life a lot easier if you have more than one person involved in the network: the musician can do what he needs to do at the keyboards, the programmer at the computer, and the engineer at the mixing desk or effects unit.
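For anyone who wants to check the speed comparison, here is a rough Python sketch that uses only the nominal figures quoted above. The constant and function names are my own, chosen purely for illustration, and real‑world throughput on either interface will be lower than these headline rates.

```python
# Nominal figures quoted in the text; real throughput will be lower.
USB_MBPS = 12
FIREWIRE_MBPS = (100, 200, 400)
USB_INTERVAL_US = 1000       # 1 msec service interval
FIREWIRE_INTERVAL_US = 125   # 125 microsecond service interval


def speed_ratio(firewire_mbps, usb_mbps=USB_MBPS):
    """How many times faster a FireWire rate is than USB (illustrative helper)."""
    return firewire_mbps / usb_mbps


# Ratio for each FireWire speed, rounded to one decimal place.
ratios = {rate: round(speed_ratio(rate), 1) for rate in FIREWIRE_MBPS}

# How many FireWire service intervals fit into one USB service interval.
interval_ratio = USB_INTERVAL_US / FIREWIRE_INTERVAL_US

print(ratios)
print(interval_ratio)
```

The slowest FireWire setting comes out a little over eight times faster than USB and the fastest a little over 33 times, which is where the 'between eight and 33' range comes from.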

Meet Yoshi Sawada

To get a deeper insight into how mLAN actually functions and what we, the users, might expect to get in the way of performance from it, I spoke with Yoshi Sawada of Yamaha Corp in Los Angeles. Yoshi is a key member of the mLAN development team, and was at Summer NAMM in Nashville to promote Yamaha's newly announced licensing arrangements for the mLAN technology. I began by asking him how long mLAN had been under development.

"Yamaha were actually looking at the possibility of a music networking protocol before the IEEE 1394 FireWire interface appeared in 1995. We had been studying various hardware connection possibilities for some time but when we looked at the 1394 protocol, we quickly came to the conclusion that the hardware would be the best physical interface to use. It is ideal being able to put audio, MIDI, controller and file data, and even timecode on a single cable. It also makes it very easy to interface with computers, as FireWire is a recognised standard and is on most Macs now being built. On the PC, the DV [Digital Video] application will ensure a very high takeup of FireWire by PC integrators. In Japan, where the ownership of DV camcorders is widespread, FireWire has proved to be one of the most important features consumers look for when they buy a PC, and the same is starting to happen in the West.

"As far as a protocol for music and audio was concerned, we decided that producing our own chipset would be the best solution because of digital audio's very specific requirements. Although the I/O medium is the same on 1394, whether you are sending digital video, digital audio or whatever, there are some unique things which you have to take care of with digital audio and MIDI — not least their timing relationship to one another — which are best dealt with via a proprietary chipset. So we developed a chipset specifically for mLAN, which has the additional advantage that small companies need to do less work and development to support mLAN than if we simply told them how to encode the signals for transmission over 1394. Also, if MIDI data and digital audio are transmitted side‑by‑side, this makes the LSI chip needed to do the job cheaper, allowing manufacturers both small and large to include the technology in their systems without a significantly higher cost to the end user."

A Little Background

Figure 1 — The mLAN protocol stack.

To understand exactly how mLAN works and how it fits into the greater scheme of IEEE 1394, it is helpful to understand the Protocol Stack (see Figure 1), which makes clear how the sub‑protocols relate to one another and which level of the overall protocol each one occupies. Before you study it in detail, however, I'd better explain some basic terms.

Traditional interface protocols fall into two types, depending on how much data they need to send and how time‑critical it all is. MIDI, for example, has relatively little data to send compared to something like digital audio (where there are upwards of 44,100 pieces of 16‑bit information per second), and is an example of the much more common asynchronous type, where accurate delivery of the information is prioritised over the time of arrival. If a packet of data is lost, an asynchronous interface will retransmit it even if it makes subsequent packets late. This means that there is no guarantee on the time of arrival. This interface type is ideal for data transfers like bulk MIDI SysEx or sample dumps, where unless you want the entire file to be compromised, it is vital that every single packet is received and verified. The disadvantage is that transmission of sample files is seriously non‑real time (typically several minutes for a few seconds). Most interfaces we use on computers are like this; they only send data when something needs to be communicated, like a MIDI Note On.

However, with real‑time digital audio and video, the data stream is huge and it is far more important that transmission is uninterrupted than 100 percent accurate (after all, which annoys you more: stuttering audio or the odd click?). For this type of transfer, an isochronous protocol is much better. This guarantees the time of arrival of each piece of information as well as the bandwidth available at any given point by sending a packet of data at given time intervals, whether or not there is anything to put in it. The disadvantage is that if a piece of data is lost, there is no time to go back and retransmit it, because the moment when it is needed is already past. All the digital audio interfaces with which you may be familiar (S/PDIF, AES‑EBU, ADAT Optical, and so on) work like this.
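The trade‑off between the two transfer types can be sketched as a toy model in Python. This is emphatically not how 1394 itself is implemented: the function names are hypothetical, the 125 microsecond slot is simply borrowed from FireWire's service interval, and I have assumed that a lost asynchronous packet is retransmitted exactly once and then arrives safely.

```python
def deliver_async(packets, lost, slot_us=125):
    """Asynchronous toy model: lost packets are resent, so every payload
    arrives, but everything after a loss arrives later than planned."""
    time_us = 0
    arrivals = []
    for index, payload in enumerate(packets):
        time_us += slot_us
        if index in lost:
            time_us += slot_us  # assumed: one retransmission costs one extra slot
        arrivals.append((time_us, payload))
    return arrivals


def deliver_isochronous(packets, lost, slot_us=125):
    """Isochronous toy model: one packet per fixed slot, never resent.
    A lost payload simply shows up as None at its scheduled time."""
    return [
        ((index + 1) * slot_us, None if index in lost else payload)
        for index, payload in enumerate(packets)
    ]


packets = ["a", "b", "c", "d"]
lost = {1}  # pretend the second packet is corrupted in transit

print(deliver_async(packets, lost))
print(deliver_isochronous(packets, lost))
```

In the asynchronous result every payload is present but the later ones have slipped by one slot; in the isochronous result the timing is untouched but one payload is missing, which is the 'odd click' rather than the stutter.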

If you've been relating this to the diagram, you'll already have realised that the 1394 Protocol is something special, in that it allows both types of information transfer; asynchronous for control and file data, and isochronous for real‑time audio and video transfer. Yoshi uses Figure 1 in presentations about mLAN, and at the NAMM show, he took me through it.

"At the lowest level you have the 1394 hardware and cables, known as FireWire, or iLink by Sony. This is divided into two separate transmission paths, the asynchronous transmissions which pass via the Function Control Protocol — or FCP — and the isochronous transmissions which pass via the Common Isochronous Packet, or CIP.

"The Function Control Protocol is used to send the AV/C — a set of control commands for AV devices — and other upper‑layer protocols based on asynchronous transmission, which I'll come back to later. Digital Video and MPEG data, however, is sent via the isochronous route. This is also how the Audio and MIDI Transmission Protocol — that is, the mLAN part — is sent. The header of each packet from the CIP clearly states whether the packet contains DV, MPEG or Music & Audio data."

Managing mLAN

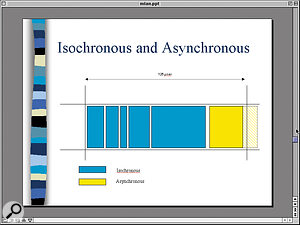

Figure 2 — The split between Isochronous and Asynchronous data in an mLAN data packet, expressed graphically.

"In fact, what we originally developed under the name mLAN has officially passed a Publicly Available Specification — or PAS — vote by the IEC and is on the way to becoming an international standard. It is now officially designated IEC 61883‑6, and known as the Audio and Music Data Transmission Protocol for the IEEE 1394 medium. Obviously, people will still continue to refer to it as mLAN, as it is so much shorter and easier to say, but we will probably call it the A/M protocol from now on. This is because at Yamaha we are starting to use 'mLAN' to refer to the higher level of specific functions which will sit on top of the Protocol Stack [see Figure 1 again]. Yamaha refers to this higher level as the mLAN Connection Management and it will allow people to get even more out of the network. The best way to look at the difference between them is that the A/M protocol allows you to have multiple channels of audio, while mLAN Connection Management, as the name suggests, manages each stream of audio and MIDI.

"I envisage these mLAN Specific Functions, as they are called, fulfilling three roles, all of which apply to the entire network on both the asynchronous and isochronous side, and not just to the Audio & MIDI Transmission Protocol.

"Firstly, when using multiple streams inside the isochronous data path, you will be able to set up different routings for audio and MIDI data within those streams. This will allow much more flexible configurations of the network without generating more streams than are actually needed for the amount of material that is passing across the network."

If you're lost, there's a good analogy to help understand Yoshi's point here: in the early days of MIDI, when very few instruments were multitimbral and only responded on one MIDI channel each, you could end up using a lot of MIDI busses to only trigger a small amount of music. But if you configured your system well, you could assign each instrument to a different channel and then daisy‑chain them via your Ins and Thrus, so it only required a single MIDI Out generating one 16‑channel buss. With mLAN, this daisy‑chaining is all that is required anyway, but with careful routing, you can keep the number of streams (or virtual 'cables') to a minimum, and so make the network more efficient. Back to Yoshi:

"Secondly, connections and configurations which have been laboriously set up by the user for a specific system or situation can be automatically re‑established on power‑up, saving the need to completely reconfigure the system each time it is powered down and up again."

This can be seen as a sort of system configuration memory, and opens up the possibility of 'user presets', defining the configuration of the network differently for each individual user or task that the network supports.

"Thirdly, you can automate the single most problematic aspect of digital audio networks: the assignment and management of the audio synchronisation pathway. That is, which devices act as the master and generate the word clock, and how the clock is passed on reliably to the other devices in the system. A typical example of this might be the temporary changes in the sync hierarchy needed when bringing in some additional audio material from a source with no word clock input capability, before returning to the standard recording or mixdown configurations. The mLAN Specific Functions would be able to automate this process, taking the burden off the shoulders of the network user."

My thanks go to Yoshi Sawada for taking the time during a busy trade show to talk to me about some of the more advanced aspects of the Music and Audio protocol. In Part 3, next month, we will be taking a closer look at some of the mLAN products which have already been announced and examining the roles they will fulfill in an mLAN network.

Performance Matters

Yoshi Sawada: "The most common question we get asked about mLAN is how many channels of audio data it will be able to carry, and all our literature and publicity on this subject has been left deliberately vague, for the following reason. As I've just explained, audio and MIDI take the isochronous path. Now, we can use up to 80 percent of the total bandwidth available on the 1394 buss for isochronous data transmission [see Figure 2, which shows the percentages of the 1394 data packet used for isochronous and asynchronous data transmission]. Assuming we use the 200Mbps version of 1394, we can allocate up to around 2400 bytes for one packet of isochronous transmission. To achieve audio data transmission at 48kHz, for instance, each packet of isochronous data must contain six samples for each and every channel of audio we want to send, and each sample of audio data in the A/M Protocol requires four bytes. That means that each audio channel will need 24 bytes per packet.

"Dividing 2400 bytes by 24, we find we can put roughly 100 channels of audio data over the 200Mbps version of 1394. It should be noted, however, that this calculation ignores the isochronous header and some other overheads. So the actual number of channels at 200Mbps works out at a little less than 100.
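Yoshi's arithmetic can be reproduced in a few lines of Python. This is only a sketch under stated assumptions: the names are mine, the bus rate is taken as a nominal 200Mbps, one packet is assumed per 125 microsecond service interval (8000 per second), and headers and other overheads are ignored just as they are in his rough figure. Rounding up for sample rates that do not divide evenly into 8000 is also my own assumption.

```python
CYCLES_PER_SECOND = 8000      # assumed: one isochronous packet every 125 microseconds
BYTES_PER_SAMPLE = 4          # per audio sample in the A/M Protocol, as quoted
PAYLOAD_BYTES_200MBPS = 2400  # Yoshi's approximate allocation at 200Mbps


def isochronous_budget_bytes(bus_mbps, iso_percent=80):
    """Bytes per cycle if iso_percent of the nominal bus rate is isochronous."""
    bits_per_second = bus_mbps * 1_000_000 * iso_percent // 100
    return bits_per_second // 8 // CYCLES_PER_SECOND


def samples_per_packet(sample_rate_hz):
    """Samples each channel must place in one packet (rounded up: an assumption)."""
    whole, remainder = divmod(sample_rate_hz, CYCLES_PER_SECOND)
    return whole + (1 if remainder else 0)


def max_audio_channels(sample_rate_hz, payload_bytes=PAYLOAD_BYTES_200MBPS):
    """Upper bound on channels, ignoring headers and other overheads."""
    bytes_per_channel = samples_per_packet(sample_rate_hz) * BYTES_PER_SAMPLE
    return payload_bytes // bytes_per_channel


print(isochronous_budget_bytes(200))  # 2500
print(samples_per_packet(48_000))     # 6
print(max_audio_channels(48_000))     # 100
```

Note that 80 percent of a nominal 200Mbps works out at 2500 bytes per packet, so the 'around 2400' figure sits just inside that ceiling.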

"What about MIDI over 1394? Well, each mLAN datastream (equivalent to one audio channel) can carry the equivalent of eight MIDI busses, that is 128 channels of MIDI data. So theoretically, we can accommodate around 800 MIDI busses, or 12,800 channels. If you use the 400Mbps version of 1394, the number of available channels for audio and MIDI would be doubled.

"However, this spec only applies when you have one audio transmitter and one receiver on a single 1394 buss. If you have more audio devices — or any other devices, for that matter — on the buss, then arbitration overhead becomes an issue. In practice, this could reduce the audio channel capacity to around 50 at 200Mbps. But this is still an awful lot of channels down a single cable!"
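The MIDI figures follow from the same sort of multiplication, sketched below in Python. The names are again my own, and the sketch assumes the theoretical best case in which every one of the roughly 100 datastreams available at 200Mbps is given over to MIDI; it makes no attempt to model the arbitration overhead Yoshi mentions.

```python
MIDI_BUSSES_PER_STREAM = 8   # as quoted: one datastream carries eight MIDI busses
MIDI_CHANNELS_PER_BUSS = 16  # standard MIDI: 16 channels per buss


def midi_capacity(streams):
    """Return (busses, channels) if every datastream carried only MIDI."""
    busses = streams * MIDI_BUSSES_PER_STREAM
    channels = busses * MIDI_CHANNELS_PER_BUSS
    return busses, channels


print(midi_capacity(1))    # (8, 128)
print(midi_capacity(100))  # (800, 12800), the 200Mbps best case
print(midi_capacity(200))  # (1600, 25600), doubled for 400Mbps
```

In practice a network would carry a mixture of audio and MIDI streams, so these numbers are ceilings rather than anything you would expect to see in a working studio.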