Once you’ve recorded, edited and comped your vocals, a little careful prep work could pay real dividends when it comes to the mix.

If your song has a lead vocal, it will almost always be the most important element in the mix: it’s the conversation that forms a bond between performer and listener, the teller of the song’s story, and the focus to which other instruments give support. You already understand that, of course — which is why you made the effort to find the perfect mic for your voice, record some great takes, and even do a little comping to leave you with the best possible performance. Now you’re ready to get busy with the mix... right?

Actually, just having this wonderful performance in your DAW doesn’t mean it’s ready to mix. In fact, in my experience, there’s plenty more you can do to your recorded vocals to maximise your chances of achieving a great end result. So, in this article I’ll take you step by step through my own approach to this mix‑prep stage for vocals. It won’t be a comprehensive discussion of vocal prep‑work, but what’s here works well for me — and I hope it will help you too. Next month, I’ll conclude this two‑part mini‑series with some tips for handling your vocals in the mix itself.

Taking The Hiss

Once I’ve edited and comped my ‘perfect take’, I bounce the vocal into one long track that lasts from the start of the song to the end, and the first thing I then reach for is a noise removal tool. Yes, really!

The freeware ReaFIR plug‑in is included with the Cockos Reaper DAW.

Ideally you won’t have much noise to contend with in the first place, and it’s true that the better the recording, the less you’ll need to do. But I find there’s always some degree of preamp hiss, hum, or other unwanted noise, particularly if you’re recording in a room in your home rather than a dedicated studio. Tackling such issues at the outset makes for a more ‘open’ vocal sound — and one that will respond better to whatever processing you’ll end up throwing at it in the mix, rather like removing a layer of dust from a painting will make it more striking.

There are lots of noise removal tools but if you don’t own one, check to see if your DAW has something suitable. For instance, Cockos Reaper includes the ReaFIR plug‑in (that’s also available as a free VST plug‑in for other DAWs, as part of the ReaPlugs bundle). Alternatively, you could export it for processing in a stand‑alone program — Magix Sound Forge, Adobe Audition and Steinberg Wavelab are among the audio editors that have built‑in noise reduction algorithms, and depending on the nature of the noise, various non-linear editing video apps such as Final Cut Pro may have something that will do the job. But if you plan on doing this stuff a lot it’s probably worth investing in a dedicated restoration program like iZotope’s RX.

iZotope’s RX6 is one of the best denoising plug‑ins, offering various tools for zapping different noises in your vocals, but any ‘noise print’ noise‑removal tool — including Cockos’ freeware ReaFIR — could help you remove background hiss.

The typical procedure with most of these tools is to capture a ‘noise print’ by finding a section of the recording that consists only of noise, save that as a reference sample, then instruct the program to subtract anything with those characteristics from the vocal. But you have to be careful both in capturing the noise print, and in how far you take the processing.

With that in mind, make sure you sample nothing but the noise you’re targeting; you absolutely mustn’t have any of the vocal, or any other sounds in there. You need only a few hundred milliseconds, and if you get into the practice of capturing a little ‘silence’ through the mic when recording (and ensure you keep some of it if trimming and exporting your files) this will be easy.

Note that it’s very, very easy to apply too much noise reduction — attenuation of 6‑10 dB should be enough for our purposes. Otherwise you’ll almost certainly remove parts of the vocal itself and be lumbered with unwanted ‘chirpy’ artifacts. Both of these will leave you with an artificial sound that’s far worse than leaving the noise alone!
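
To make the idea of limited attenuation concrete, here is a minimal Python sketch of a ‘noise print’ gate working on one frame of spectrum magnitudes. It is a simplified illustration under my own assumptions, not how ReaFIR, RX or any other product is implemented: the function names, the `margin` safety factor and the example numbers are all hypothetical. The point it shows is that bins resembling the noise print are turned down by a fixed, modest amount (8dB here) rather than removed outright.

```python
def db_to_gain(db):
    """Convert a level change in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)


def reduce_noise(frame_mags, noise_print, max_reduction_db=8.0, margin=1.5):
    """Turn down bins that sit close to the noise print; leave the rest alone.

    frame_mags  -- magnitudes of one analysis frame, one value per frequency bin
    noise_print -- average magnitude of the noise-only section, per bin
    margin      -- hypothetical safety factor: bins at or below noise * margin
                   are treated as noise
    """
    floor_gain = db_to_gain(-max_reduction_db)  # never cut deeper than this
    cleaned = []
    for mag, noise in zip(frame_mags, noise_print):
        if mag <= noise * margin:
            cleaned.append(mag * floor_gain)  # noise-like bin: attenuate
        else:
            cleaned.append(mag)               # vocal energy: untouched
    return cleaned


# Made-up example: bins 1 and 3 carry vocal energy, bins 0 and 2 are hiss.
noise_print = [0.010, 0.012, 0.011, 0.009]
frame = [0.011, 0.400, 0.013, 0.250]
print(reduce_noise(frame, noise_print))
```

Raising `max_reduction_db` well beyond the 6‑10 dB range pushes the noise-like bins towards zero, which is the kind of over-processing that tends to produce the ‘chirpy’ artifacts described above.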

Silence Is Golden

After reducing the overall hiss level, I go through the file and delete all the sections of silence in between vocal passages. Why? Well, the voice will mask any noise when it’s present, and when there’s no voice there will be no noise at all from headphone leakage, mouth noises, mic handling noise and so forth.

One option is to use a noise gate to remove audio that’s below a specified level. While this semi‑automated process saves time, some programs offer more sophisticated offline alternative facilities, such as Pro Tools’ Strip Silence command (most DAWs now have an equivalent). Like a gate, this identifies the areas below a certain threshold and trims out the silence for you. Some tools let you alter the level, reducing the volume of sections below the threshold anywhere from slight attenuation to minus infinity (ie. complete silence). And some give you the option of automatic crossfading between the processed and unprocessed sections, helping to ensure a less abrupt transition.
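
The threshold logic behind a strip-silence style command can be sketched in a few lines of Python. This is only an illustration of the general idea, not the algorithm used by Pro Tools or any other DAW, and the function name and parameters are hypothetical. It returns the regions to keep, treating a gap as silence only if the level stays below the threshold for a minimum number of samples.

```python
def find_sound_regions(samples, threshold, min_silence):
    """Return (start, end) index pairs for regions to keep; end is exclusive.

    A gap only counts as silence if at least `min_silence` consecutive
    samples stay below `threshold`, so brief dips inside a word survive.
    """
    regions = []
    start = None    # start index of the region currently being built
    quiet_run = 0   # consecutive below-threshold samples seen so far
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            if start is None:
                start = i
            quiet_run = 0
        elif start is not None:
            quiet_run += 1
            if quiet_run >= min_silence:
                # Close the region at the last sample that was above threshold.
                regions.append((start, i - quiet_run + 1))
                start = None
                quiet_run = 0
    if start is not None:
        # Audio ended inside a region; drop any trailing quiet samples.
        regions.append((start, len(samples) - quiet_run))
    return regions


audio = [0, 0, 0.5, 0.6, 0.01, 0.5, 0, 0, 0, 0, 0.4, 0.3, 0]
print(find_sound_regions(audio, threshold=0.1, min_silence=3))
```

In practice you would also pad each region a little on both sides and add fades at the boundaries, which this sketch leaves out.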

Here, the spaces between phrases and words have been cut, with fades to add smooth transitions.

Handy as these processes can be for some sources, though, the most flexible and effective approach for vocals is, alas, the most tedious: making these edits manually. Cut the spaces between vocals, then add fade‑ins and fade‑outs to smooth the transition from vocal to silence. Also, consider inserting a steep high‑pass filter (around 48dB per octave if available) to cut the low frequencies below the voice where subsonics, hum, mud and p‑pops live.

On sustained notes with doubled vocals you can line up the fade‑outs on the two vocal tracks, so they fade out together. This results in a tighter, more cohesive, vocal sound. You could also try using your DAW’s audio‑warp feature if one syllable/note is too short, rather than too long. As always, you must listen out for unwanted artifacts; warping can not only introduce unwanted sounds when you take it too far, but it can also move transitions between vocal sounds that need to remain at a certain point in time to feel natural.

Every Breath You Take

Breath inhales are a natural part of the vocal process, so removing these entirely will leave you with an unnatural‑sounding vocal. For example, an obvious inhale can cue the listener that the subsequent vocal section is going to be a little more intense.

That said, in most popular genres, compression will be applied to vocal parts during the mix. Applying any compression will, relative to the vocal itself, bring up the levels of any lower‑level artifacts such as breaths, possibly to the point of being objectionable. To reduce the level of inhales, I find it’s most effective to define the region with the inhale and reduce the gain by 3‑7 dB or so. Depending on your DAW and the plug‑ins you have available, this can be done as an off‑line process, a clip‑based insert, via a clip envelope or using pre‑insert level automation. This amount of reduction will be enough to retain the inhale’s essential character, but make it less obvious compared to the vocal.
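
A little arithmetic shows why compression makes breaths more obvious. The Python sketch below models an idealised compressor with a hard knee and no attack or release behaviour. The threshold, ratio and levels are numbers I have picked purely for illustration, not recommended settings.

```python
def compress_db(level_db, threshold_db=-20.0, ratio=4.0):
    """Static compressor curve: only levels above the threshold are reduced."""
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio


vocal_peak_db = -6.0   # assumed level of the loud sung notes
breath_db = -30.0      # assumed level of an inhale

gap_before = vocal_peak_db - breath_db
gap_after = compress_db(vocal_peak_db) - compress_db(breath_db)

print(gap_before)  # 24.0
print(gap_after)   # 13.5
```

With these figures the inhale ends up 10.5dB closer to the sung notes after compression, because the compressor pulls the peaks down and leaves the breath alone. Trimming the inhale beforehand offsets part of that shift.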

The section with the inhale has been split into a separate clip, with a clip gain envelope reducing the inhale’s level by about 7dB.

If you have the time and inclination, another approach is to cut all the breaths out and move them to an adjacent DAW track. Then you can use that track’s fader and automation to set the breath levels very precisely, though note that you’ll have to route both that track and the original part to a shared bus if you want to apply the same vocal processing. By the way, exactly the same technique can be used to reduce essing.

Ex‑plosives

Plosives almost always require attention. The brute force method is to apply a steep high‑pass filter that removes low frequencies where plosives like ‘p‑pops’ occur (the same filter will also cut low‑frequency wind noise). But a more refined option is to apply the low‑cut filtering only where the plosives occur. Again, you can achieve this in a couple of ways: either separating out the pop and applying a filter to the clip/region, or automating a filter’s cutoff frequency. The latter can sound smoother. It’s worth mentioning that if you don’t have steep filter options available in your DAW, you can create steeper ones by simply chaining multiple high‑pass filters in series.
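
The effect of chaining filters can be checked with a short calculation. The Python sketch below assumes the simplest possible case, identical first-order high-pass stages (roughly 6dB per octave each) with textbook magnitude response. The filters in your DAW will usually be higher order, so treat the numbers as illustrative only.

```python
import math


def highpass_gain_db(freq, cutoff, stages=1):
    """Gain in dB at `freq` for `stages` identical first-order high-pass
    filters in series, each set to the same `cutoff` (both in Hz)."""
    x = freq / cutoff
    single = x / math.sqrt(1 + x * x)  # magnitude of one first-order stage
    return stages * 20 * math.log10(single)


# Slope well below the cutoff: compare two frequencies one octave apart.
for stages in (1, 2, 4, 8):
    slope = highpass_gain_db(10, 80, stages) - highpass_gain_db(5, 80, stages)
    at_cutoff = highpass_gain_db(80, 80, stages)
    print(stages, round(slope, 1), round(at_cutoff, 1))
```

The slopes add up as you would hope, but so do the losses at the cutoff frequency itself: each stage is about 3dB down there, so a chain of identical filters cuts further into the voice than a single one. You may need to lower the cutoff of each stage to compensate.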

High‑pass filters come with a health warning, though: if you reduce the lows sufficiently to completely remove the pop, you’ll usually thin the voice somewhat too. So a better, albeit more time‑consuming, option is to zoom in on the p‑pop (it will have a distinctive waveform that you’ll soon learn to recognise) and split the clip just before the pop. Then, simply add a fade‑in over the p‑pop. The fade‑in’s duration determines the pop’s severity, so you can fine‑tune the desired amount of ‘p’ sound.
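
In sample terms, the fade-in fix is just a gain ramp starting at the split point. Here is a minimal Python sketch using a linear ramp. The function name is hypothetical, and DAWs typically offer several fade shapes rather than only a straight line.

```python
def fade_in(samples, start, length):
    """Return a copy of `samples` with a linear fade-in of `length` samples
    beginning at index `start` (the split point just before the pop)."""
    out = list(samples)
    end = min(start + length, len(out))
    for i in range(start, end):
        out[i] *= (i - start) / length  # gain ramps from 0 towards 1
    return out


# A longer fade suppresses more of the pop; a shorter one keeps more 'p'.
clip = [1.0] * 6
print(fade_in(clip, start=1, length=4))
```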

Here we’re splitting a clip just before a pop, then fading in to minimise the unwanted effect.

Pops aren’t the only problem — there are some other annoying mouth noises that consist of short, ‘clicky’ transients. To fix these, you can usually zoom in, cut the transient, and drag the section following the transient across the gap to create a crossfade. If that doesn’t work, try copying and pasting some of the adjoining signal over the gap. Choose an option that mixes/crossfades the signal with the area you removed; overwriting might produce an audible discontinuity at the start or end of the pasted region.

Phrase‑ology

Unless you have the mic technique of KD Lang, the odds are short that some phrases in your recording will be softer than others. I don’t mean intentionally softer, due to natural dynamics, but accidentally, whether as a result of poor mic technique, running out of breath, or an inability to hit one note as strongly as others.

Many people would suggest you reach for a compressor. But if you apply compression, the low‑level sections might not be affected very much, whereas the high‑level ones will sound ‘squashed’. So, while compression certainly has its place, I find that it’s better to edit the vocal level, phrase by phrase (a phrase being a group of words; I’m not talking about individual words or syllables), to achieve a more broadly consistent level before applying any compression; this will retain more overall dynamics. If you need to inject an element of expressiveness later on that wasn’t in the original vocal (eg. the song gets softer in a particular place, so you need to make the vocal softer), you can do this with judicious use of level automation.

Unpopular opinion alert! Whenever I mention this technique, self‑appointed ‘audio professionals’ complain in forums that I don’t know what I’m talking about, because ‘no real engineer ever uses normalisation’. However, this isn’t about normalising to make everything loud; no law says you have to normalise to 0dBFS, and you can normalise to any level you like. For example, if a vocal is too soft but part of that is due to natural dynamics, you can normalise to, say, ‑3dB or so in relation to the rest of the vocal’s peaks. (On the other hand, with narration I often do normalise everything to as consistent a level as possible, because most dynamics with narration occur within phrases.)

Furthermore, most DAWs will offer some way of normalising to an RMS (or even LUFS) value rather than a peak one, and this can be a better option sometimes, though personally I find peak normalisation tends to be fine; I’m a pretty consistent singer (my distance from the mic tends to reduce any big ‘blobs’ of energy), and it’s always given the results I’ve wanted. In addition I sometimes use clip‑gain or pre‑insert gain automation to balance clips, or bring up something like the end of a note.
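
Peak normalisation of a single phrase boils down to one gain value applied to the whole region. The Python sketch below shows that under simple assumptions: samples are floats where 1.0 is full scale, the region boundaries are given as sample indices, and the function name is my own. It does no crossfading at the boundaries.

```python
def normalise_region(samples, start, end, target_db):
    """Scale samples[start:end] so the region's peak lands on target_db (dBFS).

    Everything outside the region is returned unchanged.
    """
    region = samples[start:end]
    peak = max((abs(s) for s in region), default=0.0)
    if peak == 0.0:
        return list(samples)  # silent or empty region: nothing to scale
    gain = 10 ** (target_db / 20) / peak  # one gain for the whole phrase
    return samples[:start] + [s * gain for s in region] + samples[end:]


# A quiet phrase (indices 2-4) brought up to a -3dBFS peak.
vocal = [0.8, -0.7, 0.2, -0.25, 0.1]
print(normalise_region(vocal, 2, 5, target_db=-3.0))
```

Because every sample in the phrase is multiplied by the same number, the relative levels within the phrase stay exactly as they were.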

In the lower waveform, the sections in lighter blue have been normalised — these sections have a higher peak level than the same sections in the upper waveform.

The advantage of the normalisation technique is that you’ve neither added any of the artifacts that often go hand in hand with compression, nor interfered with a phrase’s ‘internal dynamics’. So even though the sound is more present and consistent it sounds completely natural. Furthermore, if you later decide to apply compression or limiting while mixing, you won’t need to use as much as you normally would to obtain the same degree of perceived volume.

However, do be careful not to get too involved in this process. If you start normalising, say, individual words within any given phrase, you will definitely end up losing some dynamics that you want to retain.

A side‑benefit of phrase‑by‑phrase normalisation is that you can define a region that starts just after an inhale, so the inhale isn’t brought up with the rest of the phrase. If your DAW does automatic crossfades when processing a section of the audio, you can often get away with applying normalisation even if the region boundary occurs on existing audio. Before you commit to this type of edit, though, listen to the transition to make sure there’s no click or other discontinuity. If there is, you’ll need to split the section to be normalised, then crossfade it with adjoining regions.

Melodyne’s magical properties extend to normalising volume levels.

Note that you can also do phrase‑by‑phrase normalisation with any version of Melodyne above Melodyne Essential. Open up the vocal that needs fixing in Melodyne, then choose the Percussive algorithm. In this mode, the ‘blobs’ represent individual words or, in some cases, phrases. Select the Amplitude tool.

Click on a blob, and drag higher to raise the level, or lower to decrease the level. In this way, you can create a smooth vocal line with consistent levels. And don’t forget that you can split blobs if you need more control. For example, it may be just the end of a word that needs a level increase.

Again, you need to remain alert to potential problems. In particular, don’t try to increase the levels beyond your available headroom; and do be aware that some blobs might be a breath inhale or plosive — you don’t want to raise those, so listen while you adjust.

Successful De‑essing

There’s an increasing trend in commercial tracks toward brighter vocals. While that can improve articulation it often over‑emphasises ‘s’ sounds. Using a de‑esser to tame these prior to other processing can keep the ‘s’ sounds from being too shrill.

Reduced to its bare essentials, most de‑essers are compressors that affect only high frequencies. A multiband compressor or a dynamic EQ can also do de‑essing, but a dedicated de‑esser will usually take less time to adjust. (By the way, a de‑esser can do more than take care of only high sounds. I recently mixed a song that had an overly loud vocal ‘shh’ sound that didn’t reach particularly high frequencies, but by setting a de‑esser to its lowest frequency I was able to reduce it.)

The Waves Renaissance De‑Esser: one of many available de‑esser plug‑ins.

Most de‑essers have an option to let you hear only what will be reduced by the de‑esser, typically called something like ‘listen’, ‘audition’ or ‘side‑chain’. There will also be controls for setting the frequency at which the de‑essing occurs, and the depth of frequency removal. With the listen function enabled, sweep the frequency control until you hear the sound you want to minimise, then adjust the depth control for the desired amount of ‘s’ reduction.

After turning off the listen function, check the vocal in context with the rest of the tracks. You may be surprised how even a little bit of de‑essing can sound like too much when the full vocal is happening, so reduce the de‑essing depth if needed.

If you find that it’s hard to set the de‑esser to take enough of the esses away without leaving things sounding lispy, or removing other bright parts of the vocal, then consider stacking two de‑essers in series, each one taking off just a little bit at a time — or taking the time to use a dynamic EQ, or cutting the esses away to an adjacent track, as discussed above in relation to de‑breathing. If you want to get really precise, you can copy (not cut) the esses to a separate track, and invert the polarity of the ess track. Then bring its fader down to minus infinity (full attenuation) and automate the level upward. As the inverted esses mix with the original esses, they’ll increasingly phase cancel. (Though note that any further processing you add will have to be applied to the two tracks together, so you’ll want to route them to a group bus.)
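
The polarity trick is easy to quantify. If the copied ess is sample-aligned with the original and otherwise identical (an assumption that only holds if neither track has extra processing or latency), mixing in the inverted copy at linear gain g leaves 1 − g of the original. This Python sketch, with a hypothetical function name, converts that into decibels for a given fader position at or below 0dB.

```python
import math


def residual_ess_db(inverted_fader_db):
    """Level of the ess relative to untouched, once the polarity-inverted
    copy is mixed in at the given fader setting (0dB = full cancellation).

    Assumes the fader stays at or below 0dB and both copies are identical
    and sample-aligned.
    """
    inverted_gain = 10 ** (inverted_fader_db / 20)
    remaining = 1.0 - inverted_gain
    if remaining <= 0:
        return float("-inf")  # complete cancellation
    return 20 * math.log10(remaining)


for fader in (-20, -12, -6, -3):
    print(fader, round(residual_ess_db(fader), 2))
```

Note how non-linear the relationship is: with the inverted track at ‑20dB the ess drops by less than 1dB, while at ‑6dB it drops by about 6dB. Most of the useful range sits in the last few dB of fader travel.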

Pitch Perfect

By this stage, your vocal should sound cleaner, with a more consistent level, and any annoying artifacts have been tamed — all without detracting from the natural qualities of the vocal performance. With this solid foundation to build on you can start work on more elaborate processes, such as pitch‑correction.

Yes, the critics are right: pitch‑correction can suck all the life out of vocals. I once proved this to myself accidentally when working on some background vocals. I wanted them to have an angelic, ‘perfect’ quality; because the voices were already very close to proper pitch anyway, I thought just a tiny bit of manual pitch‑correction would give the desired effect. I was totally wrong, because the pitch‑correction simply took away what made the vocals interesting in the first place — the tiny variances in pitch between the voices. Although an epic fail as a sonic experiment, it was a valuable lesson, because it caused me to start analysing vocals, and to learn what makes them interesting and what pitch‑correction can take away.

And that’s when I found out that the critics are also totally wrong! Because pitch‑correction, if it is applied selectively, can enhance vocals tremendously, without anyone ever suspecting the sound had been corrected. Pitch‑corrected vocals needn’t impart a robotic quality.

The examples here reference Celemony Melodyne, but other programs like Waves Tune, iZotope Nectar, Synchro Arts ReVoice Pro and of course the grand‑daddy of them all, Antares Auto‑Tune, all work similarly. Many DAWs now have a similar facility built in too, including Logic’s Flex Pitch and Cubase’s VariAudio, and some others ship with a version of Melodyne. Once these clever tools have analysed the vocal, they display the note pitches graphically (much like the piano roll view for MIDI notes). You can generally quantise the notes to a particular scale with ‘looser’ or ‘tighter’ correction, and can often correct timing and formant as well as pitch. But most importantly, with most pitch‑correction software you can turn off automatic quantising, and instead correct the pitch manually, by ear — which I think of as being able to do surgery with a scalpel instead of a machete!

Pitch‑correction works best on vocals that are ‘raw’, without any processing. Modulation and time‑based effects can make pitch‑correction glitchy at best, and at worst impossible. Even EQ that emphasises the high frequencies can create unpitched sibilants that confuse pitch‑correction algorithms. Aside from the level‑based DSP processes mentioned previously, the only processing that’s ‘safe’ to use on vocals prior to employing pitch‑correction is de‑essing. If your pitch‑correction processor inserts as a plug‑in (eg. iZotope’s Nectar), then make sure it’s before any other processors in the signal chain. Occasionally, a little targeted corrective EQ, just to tame unwanted resonances, might help the pitch processor’s algorithms better identify a note’s pitch, but that tends to be the case less with vocals than with some other sources (eg. bass or electric guitars).

The key to natural‑sounding pitch‑correction is simple: listen before you correct, and don’t correct anything that doesn’t sound wrong. Manual correction takes more effort to get the right sound (you’ll become best friends with your program’s Undo button), but the human voice simply does not work the way pitch‑correction software does when set to auto‑pilot.

Melodyne displays the slides from one note to another (circled in green) as well as the pitch associated with the note.

One of my synth‑programming tricks on choir and voice patches is to add short, subtle, upward or downward pitch shifts at the beginning of phrases. Singers rarely go from no sound to perfectly pitched sound, and the shifts add a major degree of realism to patches. Pitch‑correction has a natural tendency to remove or reduce these shifts, which is partially responsible for pitch‑corrected vocals sounding ‘not right’. It’s crucial not to ‘correct’ any such slides that contribute to a vocal’s urgency.

The Ups & Downs Of Vibrato

Sometimes vibrato tends to run away from the pitch, and the variations become excessive. With Melodyne, the key to fixing this is the Note Separation tool. (Incidentally, don’t assume this is unavailable in Melodyne Essential just because there’s no button for it; if you hover just over the top of a note, the note separation tool will appear as a vertical bar cursor with two arrows.)

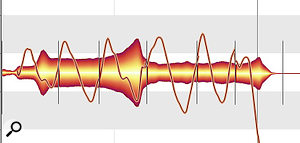

Separating the individual cycles in vibrato can produce a smoother, more consistent, vibrato that still sounds natural.

Let’s fix a note with pretty ragged vibrato (shown at the top in the screenshot, right). Use the note separation tool to separate the blob at each cycle of vibrato (middle). The blobs have changed pitch because the pitch is based on the blob’s average pitch. Cutting the note into smaller blobs defines the average pitch more precisely. Note that you can also use splitting to improve the pitch‑correction performance — vowels are more about carrying pitch than consonants, so you’ll often get more accurate results if they’re separated from the consonants.

Finally, correct the pitch centre and pitch drift (the screenshot shows 100 percent correction). Check out the right‑most blob; it’s jumped up a semi‑tone because Melodyne thinks it’s a different pitch now that it’s been cut, so drop it down a semi‑tone and you’re done — the vibrato now sounds much more consistent.

That’s as far as you can go with Melodyne Essential, but if you have Melodyne Editor, you can call up the Pitch Modulation tool and fine‑tune the vibrato depth for greater consistency (note how the vibrato cycles have about the same modulation depth). You may see some discontinuities, but it’s more than likely that you won’t hear them.
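
The point that a blob’s pitch is an average, and that splitting changes what gets averaged, can be shown with a toy example in Python. The pitch values below are invented, measured in cents away from the intended note, and the code does not model Melodyne’s actual analysis in any way.

```python
def average_pitch(cents):
    """Mean pitch offset of a segment, in cents from the target note."""
    return sum(cents) / len(cents)


# Three vibrato cycles whose centre drifts upward over the note (made-up data).
cycles = [
    [-45, -15, -45, -15],
    [-15, 15, -15, 15],
    [15, 45, 15, 45],
]

whole_note = [value for cycle in cycles for value in cycle]

print(average_pitch(whole_note))             # 0.0
print([average_pitch(c) for c in cycles])    # [-30.0, 0.0, 30.0]
```

Treated as one note, the average says the pitch is spot on, so centring it would change nothing. Treated as three segments, the drift from 30 cents flat to 30 cents sharp becomes visible, and each segment can be centred separately.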

You can adjust the amplitude of individual vibrato cycles with Melodyne’s Pitch Modulation tool.

There’s yet another way to add vibrato if either a note doesn’t have any, or you’ve ‘flattened’ pitch variations with pitch‑correction: apply a vibrato plug‑in only to certain notes. You can do this by inserting the effect into a clip that consists of the part where you want vibrato, splitting that part off into a separate track with a vibrato plug‑in, or automating the bypass or wet/dry controls of a vibrato plug‑in on the main track. Even if you don’t have a vibrato processor per se, you can usually use a chorus or flanger to cobble one together that will do the job.

You can often coax a chorus or flanger into producing vibrato if its mix control can be set to processed sound only.

Use only a single voice of flanging or chorusing, set the balance to processed sound only, pull way back on the depth (you don’t need a lot), and set the rate for the desired vibrato speed. Even better, if the depth is automatable, you can fade the vibrato in and out for a more realistic effect. Just remember to keep it subtle, or people will spot that it’s fake.
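
To get a feel for how little depth is needed, you can estimate the pitch swing a modulated delay produces. Chorus and flanger effects are built on a delay line whose delay time is swept by an LFO, and a delay that is changing at d′(t) seconds per second shifts pitch by a factor of 1 − d′(t). The Python sketch below assumes a sine-wave LFO and expresses depth as the peak swing of the delay time in milliseconds. Real plug‑ins rarely label their depth control in those units, so the parameter names and figures are my own assumptions.

```python
import math


def vibrato_depth_cents(rate_hz, delay_swing_ms):
    """Peak upward pitch deviation, in cents, for a delay line whose delay
    time is modulated by a sine wave.

    delay_swing_ms is the peak deviation of the delay time from its centre.
    For a sine LFO the steepest rate of change of the delay is
    2 * pi * rate * swing, which sets the largest pitch shift.
    """
    peak_slope = 2 * math.pi * rate_hz * (delay_swing_ms / 1000)
    return 1200 * math.log2(1 + peak_slope)


# Example figures only: a 5.5Hz rate with half a millisecond of delay swing.
print(round(vibrato_depth_cents(5.5, 0.5), 1))
```

With those example settings the swing works out at just under a third of a semitone each way, which is already clearly audible as vibrato. That is why pulling the depth way back is the right instinct.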

All Prepped Up...

All this editing involves a fair amount of detailed work, and it can easily take an hour to optimise a complete vocal in this way — but I really do think that the results are worth it. As I said at the outset, there’s no more important element of any song than the lead vocal, and a smooth, consistent, performance that hasn’t had the life compressed out of it gives the vocal more importance.

Of course, there’s yet more to be done to enhance the vocal when it comes to actually mixing the track — but that’s a topic I’ll leave for Part 2.